HAProxy 3.2 is here, and this release gives you more of what matters most: exceptional performance and efficiency, best-in-class SSL/TLS, deep observability, and flexible control over your traffic. These powerful capabilities help HAProxy remain the G2 category leader in API management, container networking, DDoS protection, web application firewall (WAF), and load balancing.

Automatic CPU binding simplifies management and squeezes more performance out of large-scale, multi-core systems. Experimental ACME protocol support helps automate the loading of TLS files from certificate authorities such as Let's Encrypt and ZeroSSL. Improvements to the Runtime API and Prometheus exporter make it easier to monitor your load balancers and inspect traffic. QUIC protocol support is now faster, more reliable on lossy networks, and more resource-efficient. There’s even an easter egg in store for fans of Lua scripting!

In this blog post, we’ll explore all the latest changes in detail. As always, enterprise customers can expect to find these features included in the next version of HAProxy Enterprise.

Watch our webinar HAProxy 3.2: Feature Roundup to hear our experts examine the new features and updates, and to participate in the live Q&A.

New to HAProxy?

HAProxy is the world’s fastest and most widely used software load balancer. It provides high availability, load balancing, and best-in-class SSL processing for TCP, QUIC, and HTTP-based applications.

HAProxy is the open source core that powers HAProxy One, the world’s fastest application delivery and security platform. The platform consists of a flexible data plane (HAProxy Enterprise and HAProxy ALOHA) for TCP, UDP, QUIC and HTTP traffic; a scalable control plane (HAProxy Fusion); and a secure edge network (HAProxy Edge).

HAProxy is trusted by leading companies and cloud providers to simplify, scale, and secure modern applications, APIs, and AI services in any environment.

How to get HAProxy 3.2

You can install HAProxy version 3.2 in any of the following ways:

Install the Linux packages for Ubuntu / Debian.

Run it as a Docker container. View the Docker installation instructions.

Compile it from source. View the compilation instructions.

Performance improvements

HAProxy 3.2 brings performance improvements that enhance HAProxy’s efficiency and scalability on multi-core systems, reduce latency under heavy load, and optimize resource usage.

Automatic CPU binding

With version 3.2 comes great news for users with massively multi-core systems! Included in this release are significant enhancements that extend the CPU topology detection introduced in version 2.4.

Nearly two years in development, these changes make HAProxy's CPU binding much more automatic. CPU binding is the assignment of specific sets of threads to specific sets of CPUs with the goal of optimizing performance.

HAProxy's automatic CPU binding mechanism first analyzes your system's CPU topology in detail. It looks at the arrangement of CPU packages, NUMA nodes, CCX (core complexes), L3 caches, cores, and threads. It then determines how best to group its threads and which CPUs those threads should run on, so as to minimize the latency of sharing data between threads. Reducing this latency generally improves performance.

Since version 2.4, efforts have been underway to significantly reduce HAProxy's need to share data between its threads. Version 3.2 includes significant updates that allow for better scaling of HAProxy's subsystems across multiple NUMA nodes to improve performance for CPU-intensive workloads, such as high data rates, SSL, and complex rules. These efforts enable HAProxy to more intelligently use multiple CPUs.

What does this mean for you? In our testing, for most systems the CPU binding configuration that HAProxy determines automatically provides the best performance, and most users should see no difference in configuration requirements. However, additional configuration tuning can bring further performance gains on two kinds of systems:

A large system with many cores and multiple CCX.

A heterogeneous system with both "performance" and "efficiency" cores.

Here are some considerations and scenarios where additional configuration is useful:

On systems with more than 64 threads, additional configuration is required for HAProxy to use more than 64 of them.

By default, HAProxy limits itself to a single NUMA node's CPUs to avoid the performance overhead of communication across nodes. This avoids those expensive operations, but it also means that on large systems HAProxy does not automatically use all available hardware resources.

You may want to limit the CPUs on which HAProxy can run to a subset of available CPUs to leave resources available, for example, for your NIC or other system operations.

On heterogeneous systems, which have cores of different types such as "performance" cores and "efficiency" cores, you may want HAProxy to use only one type of core.

On systems with multiple CCX or L3 caches, you will likely want HAProxy to automatically create thread groups to limit expensive data sharing between distant CPU cores.

Prior to version 3.2, these cases required additional, complex configuration that can be challenging to configure correctly for your specific system, and that is often difficult to manage across multiple systems and upgrades.
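As an illustration of that manual approach, here is a sketch of how a hypothetical 128-CPU machine might have been configured before 3.2. It assumes CPUs are numbered 0-127, and it relies on HAProxy's limit of 64 threads per thread group. The way the CPUs are split between the two groups is also an assumption, so check your actual topology with lscpu -e before adapting it.

```haproxy
global
    # Hypothetical 128-CPU machine: a thread group holds at most 64 threads,
    # so running 128 threads requires at least two groups
    nbthread 128
    thread-groups 2
    # Pin all threads of group 1 to CPUs 0-63 and all threads of group 2 to CPUs 64-127
    cpu-map 1/all 0-63
    cpu-map 2/all 64-127
```

You have to rewrite a split like this for every machine with a different topology. That kind of platform-dependent configuration is what the new directives aim to simplify.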

Version 3.2 introduces a middle ground between the default, automatic configuration and complex manual configurations, allowing you to instead use new, simple configuration directives to tune how you would like HAProxy to apply the automatic CPU binding. If you are already manually defining thread groups or cpu-maps, these enhancements can potentially reduce the complexity of your configuration file and make your configuration less platform-dependent.

These simple global configuration directives new to version 3.2 are cpu-policy and cpu-set. You can use cpu-set to symbolically define the specific CPUs on which you want HAProxy to run and cpu-policy to specify how you want HAProxy to group threads on those CPUs.

To see the results of the automatic CPU binding in action, or in other words, to see how HAProxy has arranged and grouped its threads, run HAProxy with the -dc command line option. It will log its current arrangement of threads, thread groups, and CPU sets. Example:

```shell
sudo haproxy -f /etc/haproxy/haproxy.cfg -dc -c
```

```text
Thread CPU Bindings:
  Tgrp/Thr  Tid        CPU set
  1/1-16    1-16       16: 0-15
```

Additional process management settings you apply, including cpu-policy, will affect this output. If the output from either running HAProxy with the -dc option or from running the command lscpu -e indicates that your system has multiple L3 caches, you could consider testing your configuration with a cpu-policy other than the default.

If you have multiple CCX or L3 caches, you can set cpu-policy to performance, and HAProxy will automatically create thread groups to limit expensive data sharing between distant CPU cores.

Consider a 64-core 3rd Gen AMD EPYC with no additional settings. By default, only 64 threads are enabled, all in the same thread group spanning the 8 CCX. This is very inefficient, because threads may then share data between distant CPUs:

```text
Thread CPU Bindings:
  Tgrp/Thr  Tid        CPU set
  1/1-64    1-64       128: 0-127
```

Now with cpu-policy performance on the same system, all threads are enabled and they’re efficiently organized to deliver optimal performance:

```text
Thread CPU Bindings:
  Tgrp/Thr  Tid        CPU set
  1/1-16    1-16       16: 0-7,64-71
  2/1-16    17-32      16: 8-15,72-79
  3/1-16    33-48      16: 16-23,80-87
  4/1-16    49-64      16: 24-31,88-95
  5/1-16    65-80      16: 32-39,96-103
  6/1-16    81-96      16: 40-47,104-111
  7/1-16    97-112     16: 48-55,112-119
  8/1-16    113-128    16: 56-63,120-127
```

If you are running on a heterogeneous system, where you have multiple types of cores, for example both "performance" and "efficiency" cores, you can set cpu-policy to performance to direct HAProxy to use only the larger (performance) cores, and HAProxy will automatically configure its threads and thread groups for those cores. This small configuration change could result in a performance boost in some areas such as stick-tables, queues, and using the leastconn and roundrobin load balancing algorithms.

There may be cases where you don't want HAProxy to use specific CPUs, or where you want it to run only on specific CPUs. For this, use cpu-set, which lets you specify symbolically which CPUs HAProxy should use. It also supports a reset action that undoes any CPU restriction placed on HAProxy from outside, for example by taskset.

Use drop-cpu <CPU set> to specify which CPUs to exclude or only-cpu <CPU set> to include only the CPUs specified. You can also set this by node, cluster, core, or thread instead of by CPU set. Once you’ve defined your cpu-set, HAProxy then applies your cpu-policy to assign threads to the specific CPUs.

For example, if you want to bind only one thread to each core in only node 0, you can set cpu-set as follows:

```haproxy
global
    cpu-set only-node 0 only-thread 0
    cpu-policy performance
```

You can then keep the default cpu-policy (first-usable-node if none is specified) or choose the one you want HAProxy to use, for example performance, as in the example above.
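Along the same lines, here is a minimal sketch that reserves a couple of CPUs for the NIC or other system work. The CPU numbers 0-1 are an assumption for illustration. Running HAProxy with -dc afterward shows which CPUs its threads actually landed on.

```haproxy
global
    # Exclude CPUs 0 and 1 (hypothetical choice) so they stay free for
    # NIC interrupts and other system tasks
    cpu-set drop-cpu 0-1
    # Arrange HAProxy's threads and thread groups on the remaining CPUs
    cpu-policy performance
```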

To learn more about these directives and other performance and hardware considerations for HAProxy, see our configuration tutorial.

Be sure to benchmark any performance-related configuration changes on your system to verify that the changes provide a performance gain on your specific system.

Other performance updates

HAProxy 3.2 also includes the following performance updates:

We fixed the fairness of the lock that the scheduler uses for shared tasks. As a result, heavily loaded machines (64-core NUMA systems) see latency that is typically 8x lower, with 300x fewer occurrences of latencies of 32 ms or above.

HAProxy now pauses the processing of TCP and HTTP rules every 50 rules (a configurable number) to perform other concurrent tasks. This helps keep latencies low for configurations that have hundreds of rules.

On servers with many CPU cores, queues now use significantly less CPU. Queues were refined to be thread-group aware. When a stream finishes, they favor pending requests in the same thread group, which reduces data sharing between CPU cores.

QUIC now supports a larger Rx window to significantly speed up uploads (POST requests).

The Runtime API's wait command has been optimized to consume far less CPU while waiting for a server to become removable, when you've set the srv-removable argument. This is especially relevant for users who add and remove many servers dynamically through the Runtime API.

On a server with a 128-thread EPYC processor, watchdog warnings were occasionally emitted under extreme contention on the mt_lists. These warnings indicated that some CPUs were blocked for at least 100ms. To solve this issue, we shortened the exponential back-off, which seemed too high for these CPUs.

Memory pools have been optimized. Previously, HAProxy merged only pools of exactly the same size. Now it also merges pools whose sizes differ by less than 16 bytes or by less than 1% of their size. During a test of 1 million requests, this reduced the number of pools from 48 to 36 and saved 3MB of RAM. The Runtime API command show pools detailed now shows which pools have been merged.

The leastconn load balancing algorithm is more sensitive to thread contention, because it must use locking when moving a server's position after getting and releasing connections. In this version, it shows lower peak CPU usage. By moving the server less often, we observed a 60% performance improvement on x86 machines with 48 threads, and a 260% improvement on an Arm server with 64 threads. The algorithm is also fairer than in previous versions, which had to sacrifice fairness to maintain a decent level of performance. There's now much less difference between the most and least loaded servers.

The roundrobin load balancing algorithm now scales better on systems with many threads. Testing on a 64-core EPYC server with "cpu-policy performance" showed a 150% performance increase, because the algorithm no longer accesses variables and locks from distant cores.

The deadlock watchdog and thread dump signal handlers were reworked to address some of the remaining deadlock cases that users reported in versions 3.1 and 3.2. The new approach minimizes inter-thread synchronization, resulting in much less CPU overhead when displaying a warning.

Performance for stick tables that sync updates from peers got a boost by changing the code to use a single, dedicated thread to update the tree, which reduces thread locking. On a server with 128 threads, speed increased from 500k-1M updates/second to 5-8M updates/second.

The default limit on the number of threads was raised from 256 to 1024.

The default limit on the number of thread groups was raised from 16 to 32.

TLS enhancements

HAProxy 3.2 introduces enhancements to TLS configuration and certificate management that make setups simpler and more flexible, while laying the groundwork for built-in certificate renewal via ACME.

ssl-f-use directive

This version makes it easier to configure multiple certificates for a frontend by expanding on the work done in version 3.0. Version 3.0 added the crt-store configuration section, which configures where HAProxy should look when loading your certificate and key files. Separating out that information into its own section gives better visibility to file location details and provides a more robust syntax for referring to TLS files. But this separation of concerns was only the beginning.

In version 3.2, it was time to address how those TLS files get referenced in a frontend, going beyond adding crt arguments to bind lines. Now, you can add one or more ssl-f-use directives to reference each certificate and key you want to use in a frontend.

Putting this information on its own line, apart from bind, lets you be more expressive. You can append properties like minimum and maximum TLS versions, ALPN fields, ciphers, and signature algorithms. Previously, you had to create an external crt-list file to define those things. The ssl-f-use directive moves that information into the HAProxy configuration, eliminating the need for a crt-list file.

Using ssl-f-use directives also benefits frontends that use the QUIC protocol. QUIC requires a separate bind line. Having the ability to reference a certificate from a crt-store lets you cut down on duplication of the certificate information.

Here's an example that uses crt-store and ssl-f-use together. Note that we no longer set crt on the bind line.

```haproxy
crt-store my_files
    crt-base /etc/haproxy/ssl/certs/
    key-base /etc/haproxy/ssl/keys/
    load crt "foo.com.crt" key "foo.com.key" alias "foo"
    load crt "bar.com.crt" key "bar.com.key" alias "bar"

frontend mysite
    bind :80
    bind :443 ssl
    ssl-f-use crt "@my_files/foo" ssl-min-ver TLSv1.2
    ssl-f-use crt "@my_files/bar" ssl-min-ver TLSv1.3
    use_backend webservers
```
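Because QUIC needs its own bind line, a frontend that serves both TCP and QUIC traffic benefits directly: you reference the certificates once rather than repeating them on each bind line. The sketch below extends the example above with a QUIC listener. It makes three assumptions:

- The ssl-f-use lines apply to every bind line with the ssl keyword in the frontend.
- Your HAProxy build includes QUIC support.
- mode http is inherited from a defaults section.

The alt-svc header is the usual way to advertise HTTP/3 to clients that first connect over TCP.

```haproxy
frontend mysite
    bind :80
    bind :443 ssl
    # QUIC listener on UDP port 443 (requires an HAProxy build with QUIC support)
    bind quic4@:443 ssl alpn h3
    # Tell clients connecting over TCP that HTTP/3 is available on UDP port 443
    http-after-response add-header alt-svc 'h3=":443"; ma=900'
    # Certificates from the my_files crt-store, declared once for all ssl bind lines
    ssl-f-use crt "@my_files/foo" ssl-min-ver TLSv1.2
    ssl-f-use crt "@my_files/bar" ssl-min-ver TLSv1.3
    use_backend webservers
```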

ACME protocol

With the work to separate the loading of TLS files from their usage complete, the door is open to loading TLS files from certificate authorities that support the ACME protocol, such as Let's Encrypt and ZeroSSL.

For now, this feature is experimental and requires the global directive expose-experimental-directives. It also targets single load balancer deployments, although solutions for clusters of load balancers will come in the future.

While this initial implementation supports only HTTP-01 challenges, support for DNS-01 challenges will come later through the Data Plane API. Already, HAProxy notifies the Data Plane API of all updates via the "dpapi" event ring so that it can automatically save newly generated certificates on disk. So adding future ACME functionality through the API will be natural. HAProxy will auto-renew certificates 7 days before expiration.

The ACME scheduler starts along with HAProxy, checks the certificates, and initiates renewals. To disable it, set the global directive acme.scheduler to off.
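For example, a minimal global section that keeps ACME support available but turns the scheduler off might look like the sketch below. With the scheduler off, you would presumably trigger renewals yourself, for example with the Runtime API command acme renew used later in this walkthrough.

```haproxy
global
    # ACME support is experimental in 3.2
    expose-experimental-directives
    # Don't start the ACME scheduler, so certificates are not checked or renewed automatically
    acme.scheduler off
```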

Here's a short walkthrough of configuring HAProxy with Let's Encrypt.

Generate a dummy TLS certificate file, which we'll later overwrite with the Let's Encrypt certificate:

```shell
openssl req -x509 \
  -newkey rsa:2048 \
  -keyout example.key \
  -out example.crt \
  -days 365 \
  -nodes \
  -subj "/C=US/ST=Ohio/L=Columbus/O=MyCompany/CN=example.com"

cat example.key example.crt > example.com.pem
```

Generate an account key for Let's Encrypt. This is optional, as HAProxy will generate one for you if you don't set it yourself.

```shell
openssl genrsa -out account.key 2048
```

Update your HAProxy configuration as shown here, where:

The global section has expose-experimental-directives and httpclient.resolvers.prefer ipv4.

An acme section defines how we'll register with Let's Encrypt.

A crt-store section defines the location of our Let's Encrypt-issued certificate. Note that you don't have to use a crt-store section. For small configurations, the arguments can all go onto the ssl-f-use line.

A frontend section responds to the Let's Encrypt challenge and uses the ssl-f-use directive to serve the TLS certificate bundle.

```haproxy
global
    log /dev/log local0 info
    pidfile /var/run/haproxy.pid
    stats socket /var/run/haproxy.sock mode 660 level admin
    stats socket ipv4@*:9999 level admin
    stats timeout 30s
    master-worker
    expose-experimental-directives
    httpclient.resolvers.prefer ipv4

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 10m
    timeout client 10m
    timeout server 10m

acme letsencrypt-staging
    directory https://acme-staging-v02.api.letsencrypt.org/directory
    account-key /etc/haproxy/account.key
    contact admin@example.com
    challenge HTTP-01
    keytype RSA
    bits 2048
    map virt@acme

crt-store my_files
    crt-base /etc/haproxy/
    key-base /etc/haproxy/
    load crt "example.com.pem" acme letsencrypt-staging domains "example.com" alias "example"

frontend mysite
    bind :80
    bind :443 ssl
    http-request return status 200 content-type text/plain lf-string "%[path,field(-1,/)].%[path,field(-1,/),map(virt@acme)]\n" if { path_beg '/.well-known/acme-challenge/' }
    http-request redirect scheme https unless { ssl_fc }
    ssl-f-use crt "@my_files/example"
    use_backend webservers

backend webservers
    balance roundrobin
    server web1 172.16.0.11:8080 check maxconn 30
    server web2 172.16.0.12:8080 check maxconn 30
```

Call the Runtime API command acme renew to create a Let's Encrypt certificate.

```shell
sudo apt install socat
echo "acme renew @my_files/example" | socat stdio tcp4-connect:127.0.0.1:9999
```

By default, the certificate exists only in HAProxy's running memory. To save it to a file, call the Runtime API command dump ssl cert:

```shell
# tee runs with sudo so that writing to /etc/haproxy is permitted
echo "dump ssl cert @my_files/example" | socat stdio tcp4-connect:127.0.0.1:9999 | sudo tee /etc/haproxy/example.com.pem > /dev/null
```

You can also use the acme status command to list running tasks.

Observability and debugging tools

HAProxy provides verbose logging capabilities that allow you to see exactly where a failed request ended. Sometimes the cause is an unreachable server, sometimes it's an ACL rule that denied the request, and sometimes the server never returned a response. There are many scenarios, and seeing HAProxy's stream state at disconnection in the logs is always a good place to start a root cause analysis.

This version adds a new tool for examining the reasons behind failed requests that goes beyond the existing stream state. Add the term_events fetch method to your access log to get a series of comma-separated values. These values indicate the multiple states of a request as it flowed through the load balancer.

```haproxy
log-format "$HAPROXY_HTTP_LOG_FMT %[term_events]"
```

The log entry will look like this:

```text
{,,e1,S1s1,,,F7}
```

Clone the HAProxy GitHub repository, compile the term_events program, then run it to decode the values:

```shell
git clone https://github.com/haproxy/haproxy.git
cd haproxy
make dev/term_events/term_events
dev/term_events/term_events {,,e1,S1s1,,,F7}
### e1 : se:shutw
### S1s1 : STRM:shutw > strm:shutw
### F7 : FD:conn_err
```

By exposing a clearer view of the multiple states of a request as it moves through HAProxy, term_events gives developers a powerful, structured way to debug failed requests that were previously difficult to analyze. This will make it easier to tell if a failed request represents a bug or a problem in the host infrastructure.

Prometheus exporter

The Prometheus exporter now provides the current_session_rate metric.
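If you haven't enabled the exporter yet, a common way to expose it is a dedicated frontend like the one below. This assumes your HAProxy build includes the Prometheus exporter. The port 8405 and the /metrics path are arbitrary choices. Scraping that URL and searching for current_session_rate should then show the new metric.

```haproxy
frontend prometheus
    mode http
    # Port chosen for illustration
    bind :8405
    # Hand requests for /metrics to HAProxy's built-in Prometheus exporter
    http-request use-service prometheus-exporter if { path /metrics }
    # Don't log scrape requests
    no log
```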

Runtime API

This version of HAProxy updates the Runtime API with new commands and options, making it easier to inspect, monitor, and fine-tune your load balancer without reloading the service.

Stick table commands support GPC/GPT arrays

The Runtime API commands that manage stick tables can now use arrays for the GPT and GPC stick table data types. Since the release of HAProxy 2.5, you've been able to define the data types gpc, gpc_rate, and gpt as arrays of up to 100 counters or tags. In this release, the following commands now support that syntax:

set table

clear table

show table
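For reference, here is a sketch of a stick table declared with GPC arrays. The per_client table name and the array sizes are hypothetical. The commands above then operate on the individual counters of its entries, and show table per_client lists them.

```haproxy
backend per_client
    # Three general-purpose counters per client IP, plus the rates at which
    # each of them is incremented over a 10-second period
    stick-table type ip size 100k expire 30m store gpc(3),gpc_rate(3,10s)
```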

debug counters

The debug counters command that was added in version 3.1 has been improved to show, in human-readable language, what large values correspond to. Also, new event counters that indicate a race condition in epoll were added. To see them, use:

```shell
echo "debug counters" | sudo socat stdio tcp4-connect:127.0.0.1:9999 | grep epoll
```

show events

The show events Runtime API command now supports the argument -0, which delimits events with \0 instead of a line break, allowing you to use rings to emit multi-line events to their watchers, similar to xargs -0.

show quic

The show quic Runtime API command now supports stream as a verbosity option. Other values are oneline and full. Setting stream enables an output that lists every active stream.

show sess

The show sess Runtime API command displays clients that have active streams connected to the load balancer. In version 3.2, you can filter the output to show streams attached to a specific frontend, backend, or server. This makes it easier to diagnose connection issues in high-traffic environments. We’ve also backported this change to version 3.1.

show ssl cert

The show ssl cert Runtime API command, which lists certificates used by a frontend, now displays all of the file names associated with each certificate, not just the main one. In setups with shared certificates spread across multiple files, this command gives you a complete view of what’s in use.

show ssl sni

The new show ssl sni Runtime API command returns a list of server names that HAProxy uses to match Server Name Indication (SNI) values coming from clients. It gets these server names from the CN or SAN fields of its bound TLS certificates, or from filters defined in a crt-list. Through SNI, HAProxy can find the right certificate to use for each client, depending on the website the client is trying to reach.

This command has a few other useful features. It shows when the configured certificates expire, shows each certificate's key type (such as rsa or ecdsa), and displays the filters associated with each certificate. This is useful when managing multi-domain TLS setups.

```shell
echo "show ssl sni" | \
  sudo socat stdio tcp4-connect:127.0.0.1:9999 | column -t -s $'\t'
```

```text
# Frontend/Bind                     SNI        Negative Filter  Type   Filename                              NotAfter                  NotBefore
mysite//etc/haproxy/haproxy.cfg:30  bar.com    -                ecdsa  /etc/haproxy/certs/bar.com/bar.ecdsa  Jun 13 13:37:21 2024 GMT  May 14 13:37:21 2024 GMT
mysite//etc/haproxy/haproxy.cfg:30  bar.com    -                rsa    /etc/haproxy/certs/bar.com/bar.rsa    Jun 13 13:37:21 2024 GMT  May 14 13:37:21 2024 GMT
mysite//etc/haproxy/haproxy.cfg:30  foo.com    -                rsa    /etc/haproxy/certs/foo.com/foo.pem    Jan 4 15:57:13 2027 GMT   Dec 5 15:57:13 2024 GMT
mysite//etc/haproxy/haproxy.cfg:30  *          -                rsa    /etc/haproxy/certs/foo.com/foo.pem    Jan 4 15:57:13 2027 GMT   Dec 5 15:57:13 2024 GMT
mysite//etc/haproxy/haproxy.cfg:30  *.baz.com  !secure.baz.com  rsa    /etc/haproxy/certs/baz.com/baz.pem    Jan 4 15:57:13 2027 GMT   Dec 5 15:57:13 2024 GMT
```

trace

The trace Runtime API command gained a new trace source, ssl, that lets you trace SSL/TLS related events.

Load balancing improvements

HAProxy 3.2 introduces several enhancements that give you greater control over how traffic is distributed, how resources are utilized, and how the load balancer manages idle connections and non-standard log formats.

New strict-maxconn argument

Initially, the maxconn argument limited the number of TCP connections to a backend server. As traffic handling evolved, this setting was changed to count HTTP requests instead. A single connection can carry multiple requests, so counting requests is a more accurate way to measure the load placed on a server. We discuss this further in our blog post "HTTP Keep-Alive, Pipelining, Multiplexing, and Connection Pooling".

With HAProxy 3.2, we're introducing the new strict-maxconn argument, restoring the historic behavior of applying maxconn to TCP connections. This gives users more control over connection counts, which is important for backend services that can only handle a limited number of open connections, regardless of how many requests are sent.
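Here is a minimal sketch of a backend whose servers accept only a limited number of open connections. The server addresses reuse those from the earlier examples, and the limit of 30 is an arbitrary value for illustration.

```haproxy
backend webservers
    balance roundrobin
    # maxconn alone limits concurrent requests; adding strict-maxconn applies
    # the limit to TCP connections opened to each server instead
    server web1 172.16.0.11:8080 check maxconn 30 strict-maxconn
    server web2 172.16.0.12:8080 check maxconn 30 strict-maxconn
```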

Compression

You can now set a minimum file size for HTTP compression to only compress files large enough to matter. Recall that HAProxy 2.8 introduced a new syntax for HTTP compression, where you can compress both responses and requests. The new directives in version 3.2 set minimum file sizes in bytes to limit which files to compress. By setting a minimum size, you can avoid unnecessary compression work and keep your system running more efficiently, especially under high load.

The example below compresses responses only if they're at least 256 bytes:

```haproxy
backend web_servers
    filter compression
    compression direction response
    compression algo-res gzip
    compression type-res text/css text/html text/plain text/xml text/javascript application/javascript application/json
    compression minsize-res 256
    compression offload
```
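To compress in both directions, combine the response-side directives with their request-side counterparts. The sketch below assumes that compression minsize-req is the request-side equivalent of minsize-res, mirroring the existing algo-req and type-req pairs. The content types are illustrative.

```haproxy
backend web_servers
    filter compression
    compression direction both
    # Responses: compress HTML, text, and JSON bodies of at least 256 bytes
    compression algo-res gzip
    compression type-res text/html text/plain application/json
    compression minsize-res 256
    # Requests: compress JSON bodies sent by clients, also only from 256 bytes
    compression algo-req gzip
    compression type-req application/json
    compression minsize-req 256
```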

Relaxed HTTP parsing

In the previous release, HAProxy introduced the directives option accept-unsafe-violations-in-http-request and option accept-unsafe-violations-in-http-response. They allow more relaxed parsing of HTTP messages that violate the rules of the HTTP protocol, which can happen when communicating with non-compliant clients and servers, such as those used by some APIs.

HAProxy 3.2 adds the absence of expected WebSocket headers to that list of allowed violations. Specifically, HAProxy can now accept WebSocket requests that are missing the Sec-WebSocket-Key HTTP header and responses missing the Sec-WebSocket-Accept HTTP header.

These relaxed parsing options help you keep traffic flowing rather than rejecting requests due to minor protocol violations. This improves compatibility with a broader range of clients and servers without compromising overall stability.

Also, you can now set the HTTP response header content-length to 0. Some non-compliant applications need this with HTTP 101 and 204 responses.

While HAProxy has relaxed its parsing in these cases, it's become stricter in others. It's now more stringent about not permitting some characters in the authority and host HTTP headers.

Also, two new directives let you drop trailers from HTTP requests or responses, useful for removing sensitive information that shouldn't be exposed to clients:

option http-drop-request-trailers

option http-drop-response-trailers

A trailer is an additional field that the sender can add to the end of a chunked message to set extra metadata.
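Putting these options together, here is a sketch of a proxy in front of a non-compliant API. The frontend, backend, and server names are hypothetical. Which options you actually need depends on the violations your clients and servers produce.

```haproxy
frontend api
    mode http
    bind :80
    # Tolerate non-compliant clients, such as WebSocket requests without Sec-WebSocket-Key
    option accept-unsafe-violations-in-http-request
    default_backend api_servers

backend api_servers
    mode http
    # Tolerate non-compliant servers, such as WebSocket responses without Sec-WebSocket-Accept
    option accept-unsafe-violations-in-http-response
    # Remove trailers from requests and responses passing through this backend
    option http-drop-request-trailers
    option http-drop-response-trailers
    server api1 172.16.0.21:8080 check
```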

Load balancing syslog

The log-forward section supports two new directives that relax the rules for parsing log messages, allowing HAProxy to support a wider range of clients and servers when load balancing syslog messages:

option dont-parse-log

option assume-rfc6587-ntf

If you add the directive option dont-parse-log, a log-forward section will relay syslog messages without attempting to parse or restructure them. Use this to accommodate clients that send syslog messages that don't strictly conform to the RFC3164 and RFC5424 specifications. When you use this setting, also set format raw on the log directive to preserve the original message content.

The directive option assume-rfc6587-ntf helps HAProxy split log messages that are sent on the same TCP stream. RFC 6587 describes non-transparent framing, in which consecutive syslog messages are separated by a trailer character, usually a line feed. Ordinarily, HAProxy applies non-transparent framing only when a frame begins with the "<" character, which opens the message's priority field. With this directive, HAProxy always assumes non-transparent framing, even if the frame lacks the expected "<" character.

```haproxy
log-forward syslog
    bind 0.0.0.0:514
    dgram-bind 0.0.0.0:514
    # don't parse the message
    option dont-parse-log
    # assume non-transparent framing
    option assume-rfc6587-ntf
    # "format raw" preserves the original message content
    log backend@mylog-rrb format raw local0

backend mylog-rrb
    mode log
    balance roundrobin
    server syslog1 udp@172.16.0.12:514
```

Another change is the addition of the option host directive, which lets you keep or replace the HOSTNAME field on the syslog message. Having the ability to control the HOSTNAME that the syslog server receives can make it easier for the syslog server to filter messages and divert them into the proper log files. Below, we set the field to the client's source IP address by specifying the replace strategy, but the directive supports several strategies other than replace.

```haproxy
log-forward graylog
    bind :514
    dgram-bind :514
    # Replace HOSTNAME with the source IP address
    option host replace
    log backend@mylog-rrb local0

backend mylog-rrb
    mode log
    balance roundrobin
    server syslog1 udp@172.16.0.12:514
```

These enhancements allow HAProxy to support a broader range of syslog clients and servers that may produce non-standard log messages. By relaxing parsing rules and offering more control over message fields, you can better ensure logs are forwarded reliably and consistently.

Consistent hashing

When using the balance hash algorithm for consistent-hash load balancing, you can now set the directive hash-preserve-affinity to indicate what to do when servers are maxed out or have full queues. Consistent hashing keeps clients on the same server, but when a server is overwhelmed, blindly preserving that affinity can cause problems. With hash-preserve-affinity, you can reroute traffic away from saturated servers to available ones while preserving affinity for the rest of the traffic.
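Here is a minimal sketch with a hypothetical pair of cache servers, hashed on the request path and query string. It assumes the maxconn strategy, which skips a server once it has reached its maxconn. The configuration manual also lists always (the historical behavior) and maxqueue.

```haproxy
backend cache_servers
    # Hash on the path and query string, with consistent hashing
    balance hash pathq
    hash-type consistent
    # Keep affinity unless the selected server has reached its maxconn
    hash-preserve-affinity maxconn
    server cache1 172.16.0.31:80 check maxconn 100
    server cache2 172.16.0.32:80 check maxconn 100
```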

Check idle HTTP/2 connections

For HTTP/2, you can now enable liveness checks on idle frontend connections via the bind directive's idle-ping argument. If the client doesn't respond before the next scheduled test, the connection will be closed. You can also set idle-ping on server directives in a backend to perform liveness checks on idle connections to servers. This helps detect and clean up unused connections, making your frontend and backend more efficient.
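The sketch below enables idle-ping on both sides. The 30-second interval, the certificate path, and the use of cleartext HTTP/2 (proto h2) toward the server are assumptions for illustration. Since these checks apply to HTTP/2, the bind line offers h2 through ALPN.

```haproxy
frontend mysite
    mode http
    # Ping idle HTTP/2 client connections every 30 seconds
    bind :443 ssl crt /etc/haproxy/example.com.pem alpn h2,http/1.1 idle-ping 30s
    default_backend webservers

backend webservers
    mode http
    # Cleartext HTTP/2 to the server, pinging idle connections every 30 seconds
    server web1 172.16.0.11:8080 proto h2 idle-ping 30s
```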

Pause a request or response

A new action named pause lets you delay the processing of a request or response for a period of time. For instance, you could slow down clients that exceed a rate limit. You can either hardcode a number of milliseconds or write an expression that returns it, so dynamic values are possible.

http-request pause { <timeout> | <expr> }http-response pause { <timeout> | <expr> }
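To slow down clients that exceed a rate limit, you might pair pause with a stick table, as in the sketch below. The 20-requests-per-10-seconds threshold and the 2000 ms delay are arbitrary values chosen for illustration.

```haproxy
frontend fe_main
    mode http
    bind :80
    # Track each client's HTTP request rate over a 10-second window
    stick-table type ip size 100k expire 30s store http_req_rate(10s)
    http-request track-sc0 src
    # Hold requests from clients above 20 req/10s for 2000 ms before processing
    http-request pause 2000 if { sc_http_req_rate(0) gt 20 }
    default_backend webservers
```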

Health checks to use idle connections

Specify the new server argument check-reuse-pool to have HAProxy reuse idle connections for server health checks instead of opening new connections. This more efficient approach lowers the number of connections the server has to handle. It is especially beneficial for health checks over TLS, because it avoids the cost of establishing a new secure session for each check.

Reusing idle connections for health checks is also useful with reverse HTTP, a feature introduced in version 2.9: it lets you check application servers that are connected to HAProxy by reusing their permanent connections.
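A minimal backend sketch follows. It assumes HTTP health checks against TLS servers; the /health URI and the addresses are hypothetical placeholders.

```haproxy
backend webservers
    mode http
    # Hypothetical health endpoint
    option httpchk GET /health
    # Run checks over idle pooled connections instead of opening new ones
    server web1 172.16.0.11:8443 ssl verify none check check-reuse-pool
    server web2 172.16.0.12:8443 ssl verify none check check-reuse-pool
```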

QUIC protocol

When you choose a QUIC congestion control algorithm with the quic-cc-algo directive, HAProxy now automatically enables pacing on top of it. Pacing was previously an opt-in, experimental feature. It smooths the emission of data to reduce network losses and has shown performance increases of approximately 10 to 20 times on lossy networks or with slow clients, at the expense of higher CPU usage in HAProxy.

A side effect is that you can now select the Bottleneck Bandwidth and Round-trip propagation time (BBR) algorithm, which relies on pacing, without enabling experimental features: set quic-cc-algo to bbr. If you don't want pacing, disable it completely with the global directive tune.quic.disable-tx-pacing.
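A sketch of an HTTP/3 listener that selects BBR. The certificate path is a placeholder. The alt-svc header is a common way to advertise HTTP/3 to TCP clients and is included here only for completeness.

```haproxy
global
    # Optional: disable pacing process-wide. BBR relies on pacing,
    # so leave this commented out when using quic-cc-algo bbr.
    # tune.quic.disable-tx-pacing

frontend fe_main
    mode http
    bind :443 ssl crt /etc/haproxy/certs/example.com/cert.crt alpn h2,http/1.1
    # HTTP/3 over QUIC using the BBR congestion control algorithm
    bind quic4@:443 ssl crt /etc/haproxy/certs/example.com/cert.crt alpn h3 quic-cc-algo bbr
    # Tell TCP clients that HTTP/3 is available on the same port
    http-after-response add-header alt-svc 'h3=":443"; ma=3600'
    default_backend webservers
```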

This version also massively improves QUIC upload performance. Previous versions supported only the equivalent of a single buffer in flight, which limited upload bandwidth to about 1.4 Mbps per stream. That was quite slow for users uploading large images or videos. Starting with 3.2, an uploading stream can use up to 90% (by default) of the memory allocated to the connection, allowing it to use the full bandwidth even with a single stream. You can adjust this ratio with the global directive tune.quic.frontend.stream-data-ratio: smaller values prioritize fairness across streams, while higher values prioritize throughput. The default should suit common web scenarios by striking a balance.

Another new global setting, tune.quic.frontend.max-tx-mem, caps the total memory that QUIC tx buffers can consume. It moderates the congestion window so that all connections combined don't allocate more than that amount. By default, there is no limit.
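A sketch of both settings in the global section. The values are illustrative only. The size suffix on max-tx-mem assumes the usual HAProxy size notation, so confirm the accepted format in the 3.2 manual.

```haproxy
global
    # Let one uploading stream use at most 50% of the connection's memory,
    # favoring fairness across streams over single-stream throughput (default: 90)
    tune.quic.frontend.stream-data-ratio 50
    # Cap the memory all QUIC tx buffers may use in total (no limit by default);
    # the "m" suffix is an assumption
    tune.quic.frontend.max-tx-mem 512m
```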

One other update is that the QUIC TLS API was ported to OpenSSL 3.5, ensuring that HAProxy's LTS version supports the LTS OpenSSL version released at the same time.

Overall, users will benefit from better QUIC performance out of the box and better control over bandwidth allocation across streams.

Master CLI

When using the Master CLI to run commands against workers, you prefix a command with an @ sign followed by the worker's relative PID. In version 3.2, you can use two @ signs to stay in interactive mode until it exits or until the command completes. Typically, the Data Plane API will use this to subscribe to notifications from the "dpapi" event ring.

Agents such as the Data Plane API can use interactive-but-silent mode, which has the same prompt semantics but doesn't flood the response path with prompts. The prompt command accepts the options "n" (non-interactive mode), "i" (interactive mode), and "p" (prompt). Entering the worker from the master with @@ applies the same mode in the worker as in the master, making the transition seamless for both human users and agents.

Usability

HAProxy 3.2 adds usability improvements that reduce time searching for system capabilities, enhance observability, and ensure more predictable behavior when synchronizing data across peers:

- Calling haproxy -vv now lists the system's support for optional QUIC optimizations (socket-owner, GSO).
- An update to how stats are represented in the underlying code means that when we add a statistic, it automatically becomes available on the HAProxy Prometheus exporter page too, solving the challenge of keeping our list of Prometheus metrics up to date.
- A new event ring called "dpapi" lets HAProxy pass messages to the Data Plane API. Initially it relays messages related to the ACME protocol, and in the future it will notify the Data Plane API of other important events.
- Fixed a problem where stick table peers would learn entries from peer load balancers after the locally configured expiration had passed, creating bad entries that were difficult to remove. Now the expiration date is checked, and the locally configured value serves as a bound.
- A new global directive, dns-accept-family, takes a combination of three possible values: ipv4, ipv6, and auto. It allows you to disable IPv4 or IPv6 DNS resolution process-wide, or to use auto to check for IPv6 connectivity at boot time and periodically (every 30 seconds) to determine whether to enable IPv6 resolution.
- New global directives, tune.notsent-lowat.client and tune.notsent-lowat.server, let you limit kernel-side socket buffers to the strict minimum required by HAProxy and for non-acknowledged bytes, lowering memory consumption.
- A new global directive, tune.glitches.kill.cpu-usage, takes a number between 0 and 100 that sets the minimum CPU usage at which HAProxy begins killing connections that show too many protocol glitches. In other words, once the server gets too busy, it kills connections that have reached the glitches-threshold limit. The default is 0, meaning a connection that reaches the threshold is killed regardless of CPU usage. Consider setting this directive to twice the normally observed CPU usage, or to the normal usage plus half the idle one. This setting requires that you also set tune.h2.fe.glitches-threshold or tune.quic.frontend.glitches-threshold.
- Empty arguments in the configuration file now trigger a warning. Previously, HAProxy interpreted an empty argument as the end of the line, so any arguments after it were silently skipped. This also applies to empty environment variables enclosed in double quotes, although you can still have empty environment variables by using the ${NAME[*]} syntax. In the next version, an empty argument will be an error.
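A sketch of these global settings together. All values are illustrative. The size suffix on the notsent-lowat directives and the single-value form of dns-accept-family are assumptions to check against the 3.2 manual.

```haproxy
global
    # Accept only IPv4 DNS results process-wide (disables IPv6 resolution)
    dns-accept-family ipv4
    # Keep kernel-side unsent socket data small (sizes are illustrative)
    tune.notsent-lowat.client 16k
    tune.notsent-lowat.server 16k
    # Glitch threshold for HTTP/2 frontend connections; required for the kill setting
    tune.h2.fe.glitches-threshold 1000
    # Kill glitchy connections only once CPU usage reaches 60%,
    # roughly twice a normal load of 30%
    tune.glitches.kill.cpu-usage 60
```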

When you set the retry-on directive to define which error conditions trigger retrying a failed request to a backend server, you can now include HTTP status 421 (Misdirected Request). A server returns this status when it isn't able to produce a response for the given request. HTTP status 421 was introduced in HTTP/2. Retrying on it makes traffic handling more reliable by retrying requests that were routed to the wrong server.
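A sketch of a backend that retries on 421 alongside two long-standing retry-on conditions. The retry count and server addresses are placeholders. option redispatch 1 is included on the assumption that you want each retry to be able to pick a different server.

```haproxy
backend webservers
    mode http
    retries 3
    # Retry on connection failures, empty responses, and 421 Misdirected Request
    retry-on conn-failure empty-response 421
    # Allow every retry to be redispatched to another server
    option redispatch 1
    server web1 172.16.0.11:8080 check
    server web2 172.16.0.12:8080 check
```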

Fetch methods

New fetch methods added in this release expand HAProxy’s ability to inspect and react to client and connection information.

| Fetch method | Description |
| --- | --- |
| | Returns true if the transfer was performed via a reused backend connection. |
| | Returns the binary form of the list of symmetric cipher options supported by the client as reported in the TLS ClientHello. |
| | Returns the binary form of the list of cryptographic parameters for key exchange supported by the client as reported in the TLS ClientHello. |
| | Returns the binary form of the list of signature algorithms supported by the client as reported in the TLS ClientHello. |
| | Returns the binary form of the list of groups supported by the client as reported in the TLS ClientHello and used for key exchange, which can include both elliptic and non-elliptic key exchange. |
| | Returns the key used to match the currently tracked counter. |
| | Clears the General Purpose Counter at index <idx> of the array and returns its previous value. |
| | Increments the General Purpose Counter at index <idx> of the array and returns its new value. |

Updates to fetch methods include:

- The accept_date and request_date fetch methods now fall back to using the session's date if not otherwise set, which can happen when logging SSL handshake errors that occur before a stream is created.

Converters

Aleandro Prudenzano of Doyensec and Edoardo Geraci of Codean Labs found a risk of buffer overflow when using the regsub converter to replace patterns multiple times at once (multi-reference) with longer patterns. The risk is low, but it has been fixed, and CVE-2025-32464 was filed for it. Because the issue affects all versions, the fix will be backported.

Developers

When you build HAProxy with the -DDEBUG_UNIT flag, you can pass the -U option with the name of a function to call after the configuration has been parsed, which lets you run a unit test. A new build target, unit-tests, runs these tests.

There's also the -DDEBUG_THREAD flag that shows which locks are still held, with more verbose and accurate backtraces.

Lua

This release includes changes to HAProxy's Lua integration that make it easier to work with ACL and Map files, booleans, HTTP/2 debugging, and TCP-based services.

patref class

The new patref class lets you modify ACL and Map files from your Lua code and improves on the older core.add_acl function. For example, you could build a module that caches responses only for URLs that carry a certain URL parameter.

After getting a reference to an existing ACL or Map file, you can add or remove patterns from it. In the simple example that follows, we use patref to add the currently requested URL path to a list of URLs in an ACL file:

```lua
function add_path(txn)
    local acl_file = os.getenv("ACL_FILE")
    if acl_file == nil then
        core.Warning("Environment variable 'ACL_FILE' not set!")
        return
    end

    local patref = core.get_patref(acl_file)
    if patref ~= nil then
        local path = txn.f:path()
        patref:add(path)
        core.Info("Added to list of patterns: " .. path)
    end
end

-- Register the action with HAProxy
core.register_action("add-path", {"http-req"}, add_path, 0)
```

In this example Lua file, we invoke core.get_patref to get a reference to an ACL file, the name of which comes from an environment variable. The patref:add function adds the requested path to the file.

Your HAProxy configuration would look like this:

```haproxy
global
    log stdout format raw local0
    stats socket ipv4@*:9999 level admin
    lua-load acl-patterns.lua
    setenv ACL_FILE virt@cached_paths.txt

defaults
    mode http
    log global
    timeout connect 5s
    timeout server 5s
    timeout client 5s

cache mycache
    total-max-size 4
    max-object-size 10000
    max-age 300
    process-vary on

frontend fe_main
    bind :80
    # cache responses that match paths in ACL file
    filter cache mycache
    acl is_cached_path path -i -m beg -f virt@cached_paths.txt
    http-request cache-use mycache if is_cached_path
    http-response cache-store mycache
    # Add the request path to the ACL file
    # if 'cacheit' URL param exists
    http-request lua.add-path if { url_param(cacheit) -m found }
    default_backend webservers

backend webservers
    balance roundrobin
    server web1 172.16.0.11:8080 check maxconn 30
    server web2 172.16.0.12:8080 check maxconn 30
```

In this example:

- In the global section, we load the Lua file with lua-load and set the environment variable ACL_FILE.
- In the frontend, we use http-request lua.add-path to invoke the Lua function that adds the currently requested URL path to the ACL file. This line has an if condition so that the Lua function is called only when a URL parameter named cacheit is present.

The patref class offers other features too:

- Manipulate both ACL and Map files.
- For Map files, replace the values of matching keys.
- Add new patterns via bulk entry with the patref.add_bulk function.
- Use the prepare() and commit() functions to replace the entire ACL file at once with a new set of data.
- Subscribe to events related to manipulating pattern files with callback functions.

Corrected boolean return types

A new global directive, tune.lua.bool-sample-conversion, lets you opt in to proper handling of booleans returned by HAProxy fetch methods. Until now, when Lua code called a fetch method that returns a boolean, the return value was converted to an integer, 0 or 1. Because Lua treats every value other than nil and false as true, a 0 returned for a false result still passes an if test, which is an easy source of bugs. Setting the new global directive to normal keeps booleans as booleans, so your configuration works consistently and as intended and is easier to debug.

If you set tune.lua.bool-sample-conversion after a lua-load directive, HAProxy ignores it and emits a warning, because the directive must come before the Lua file is loaded.
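A minimal global section that shows the required ordering. The script path is a placeholder.

```haproxy
global
    # Must come before lua-load; placed after it, the directive is ignored with a warning
    tune.lua.bool-sample-conversion normal
    lua-load /etc/haproxy/myscript.lua
```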

HTTP/2 tracer

A Lua-based HTTP/2 tracer h2-tracer.lua can now be found in the git repository under dev/h2. The HTTP/2 tracer tool gives you a closer look at HTTP/2 traffic, making it easier for users to spot issues with client-server communication. By logging HTTP/2 frames, this feature makes troubleshooting and fine-tuning your setup easier.

To use it as an HTTP/2 frame decoder, first copy the h2-tracer.lua file to your HAProxy server.

Next, add a lua-load directive to the global section of your configuration:

```haproxy
global
    lua-load /etc/haproxy/h2-tracer.lua
```

Then add a listen section that receives HTTP/2 traffic and passes it on to your true frontend:

```haproxy
listen h2_sniffer
    mode tcp
    bind :443 ssl crt /etc/haproxy/certs/example.com/cert.crt alpn h2
    filter lua.h2-tracer
    server s1 127.0.0.1:4443 ssl verify none

frontend mysite
    bind :4443 ssl crt /etc/haproxy/certs/example.com/cert.crt alpn h2
    use_backend webservers
```

Your logs will show the frames exchanged between clients and HAProxy through the TCP proxy. Here's a sample output:

```text
[001] [SETTINGS sid=0 len=24 (bytes=522)]
[001] [WINDOW_UPDATE sid=0 len=4 (bytes=489)]
[001] [HEADERS PRIORITY+EH+ES sid=1 len=476 (bytes=476)]
[001] | ### res start
[001] | [SETTINGS sid=0 len=18 (bytes=27)]
[001] | [SETTINGS ACK sid=0 len=0 (bytes=0)]
[001] [SETTINGS ACK sid=0 len=0 (bytes=0)]
127.0.0.1:36502 [08/Apr/2025:14:22:47.499] mysite~ webservers/web1 0/0/1/2/3 200 822 - - ---- 2/1/0/0/0 0/0 "GET https://foo.com/ HTTP/2.0"
```

AppletTCP receive timeout

You can write Lua modules that extend HAProxy's features. One way to do that is with the AppletTCP class, which creates a service that receives data from clients over a TCP stream and returns a response without forwarding the data to any backend server. In this latest version, the receive function accepts a timeout parameter to limit how long it will wait for data from the client. This makes it easier to design services that take in varying lengths of data, such as interactive utilities that read user input, as opposed to expecting fixed-length data.

New functions

New Lua functions were added:

| Function | Description |
| --- | --- |
| AppletTCP.try_receive | Reads available data from the TCP stream and returns immediately. |
| core.wait | Waits for an event to wake the task. It takes an optional delay after which the task wakes up even if no event fired. |
| HTTPMessage.set_body_len | Changes the expected payload length of the HTTP message. |

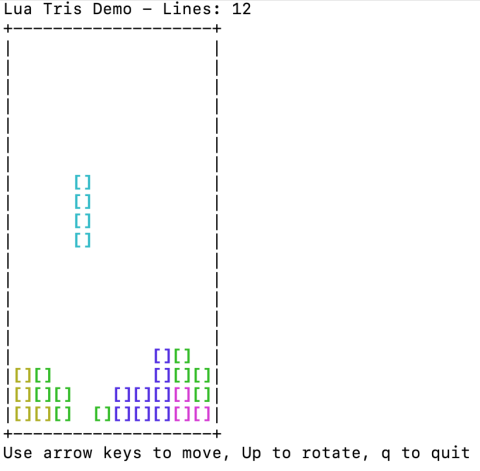

Interactive Lua Scripts

Would you like to play a game of falling blocks? The HAProxy source code now includes an example Lua script that you can load with HAProxy to play a falling-blocks game in your terminal!

But why?

HAProxy's built-in Lua interpreter enables you to extend the functionality of HAProxy with custom Lua scripts. You could use a custom script to execute background tasks, fetch content, implement custom web services, and more.

This game demonstrates how to write interactive Lua scripts for HAProxy to execute. With a script that runs in the background, you as the client interact with HAProxy, which then executes the script. Here, by contrast, you interact with the script directly as a client (by playing the game) and receive content in response (updates to the game state) in real time. You could extend this idea to practical applications, such as top-like monitoring utilities that send you continuous updates once you connect.

Version 3.2 of HAProxy addresses limitations of the Lua API and the HAProxy Runtime API that came to light during the development of this interactive Lua script. Included in these changes are some new functions that better facilitate writing non-blocking programs and the AppletTCP:receive() function now supports an optional timeout. Passing a timeout allows the function to return after a maximum wait time to let the script continue to process regular tasks such as collecting new metrics, refreshing a screen, or as is the case with this game, making a block move down one more line on the screen. A top-like utility would typically use this to refresh the screen with new metrics on some interval.

This example Lua script represents another concept gaining traction in software development today: using AI tools to help write code. As HAProxy's documentation, source code, and examples are public, AI tools can leverage them to help you build custom Lua scripts that you can use with HAProxy. In this case, it was a game that AI helped create to show the possibilities, but you could ask AI tools to help you implement practical features as well.

Want to play the game? You can deploy an instance of HAProxy with the game script using Docker:

Download the Lua script here: /haproxy/haproxy/blob/master/examples/lua/trisdemo.lua

Create a file named haproxy.cfg and paste into it the following:

```haproxy
global
    default-path config
    tune.lua.bool-sample-conversion normal
    # load all games here
    lua-load trisdemo.lua

defaults
    timeout client 1h

# map one TCP port to each game
.notice 'use "socat TCP-CONNECT:0:7001 STDIO,raw,echo=0" to start playing'

frontend trisdemo
    bind :7001
    tcp-request content use-service lua.trisdemo
```

In the same directory as those files, run the haproxytech/haproxy-alpine:3.2 image with Docker. This command will expose port 7001 on the container through which you will connect and play the game. This command mounts the current directory as a volume in the container, which will allow HAProxy to load the config file and the Lua script.

```bash
docker run -d --name haproxy -v $(pwd):/usr/local/etc/haproxy:ro -p 7001:7001 haproxytech/haproxy-alpine:3.2
```

Use socat to connect to the trisdemo frontend on port 7001:

```bash
socat TCP-CONNECT:0:7001 STDIO,raw,echo=0
```

This frontend uses the tcp-request directive with the content option and the use-service action to respond to your request by executing the Lua script, which is a TCP service. The connection remains open while the game plays, with the script receiving your input and responding with the game. Enter q to end the game.

Conclusion

HAProxy 3.2 is a step forward in performance, security, and observability. Whether you’re aiming for more efficient resource usage, simpler management, or faster issue resolution, HAProxy 3.2 has the tools to get you there. This is great news for organizations of all sizes, which will benefit from lower operational costs, increased operational efficiency, and more reliable services.

If you love HAProxy and want the ultimate HAProxy experience with next-gen security with multi-cloud management and observability, contact us for a demo of HAProxy One, the world’s fastest application delivery and security platform.

As with every release, it wouldn’t have been possible without the HAProxy community. Your feedback, contributions, and passion continue to shape the future of HAProxy. So, thank you!

Ready to upgrade or make the move to HAProxy? Now’s the best time to get started.

Additional contributors: Nick Ramirez, Ashley Morris, Daniel Skrba
