History is everywhere at Dartmouth College, and while the campus is steeped in tradition, its IT infrastructure can’t afford to get stuck in the past. In an institution where world-class research and undergraduate studies intersect, technology must be fast, invisible, and – above all – reliable.

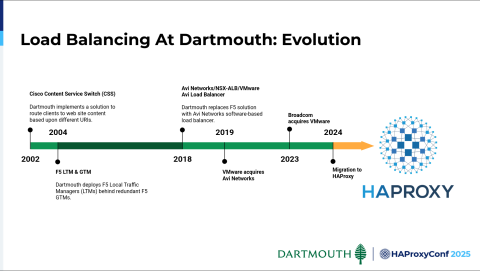

That reliability was put to the test when Dartmouth’s load balancing vendor was acquired twice in five years, as Avi Networks moved to VMware and VMware moved to Broadcom. Speaking at HAProxyConf 2025, Dartmouth infrastructure engineers Curt David Barthel and Kevin Doerr described how they began to see what they called “rising license costs without apparent value, and declining vendor support subsequent to acquisition after acquisition.”

It was clear that they were beginning to pay more for less — and it was time for a change.

After conducting thorough research, interviews, and demonstrations, Dartmouth settled on the best path forward: HAProxy One, the world’s fastest application delivery and security platform.

For Dartmouth, it wasn’t just a migration; it was an opportunity to innovate on its existing infrastructure. They leveraged the platform’s deep observability and automation to architect a custom Load Balancing as a Service (LBaaS) solution.

Today, that platform is fully automated and self-service, making life easier for 50+ users across various departments and functions. Dartmouth’s journey serves as a technical blueprint for those hoping to make the switch from Avi to HAProxy One.

Was history repeating itself?

As an undergraduate at Dartmouth, you’re likely to be taught that history doesn’t repeat itself — but sometimes it rhymes.

Infrastructure changes were not new to the Dartmouth IT team. For roughly 20 years, the team managed its infrastructure using F5 Global and Local Traffic Managers. Later, they layered a software load balancing solution from Avi Networks on top of their F5 environment.

However, the landscape shifted as Avi was acquired by VMware, which was subsequently acquired by Broadcom. The changes led to rising licensing costs and declining vendor support. The solution began to feel like a closed ecosystem, forcing Dartmouth into a state of vendor lock-in that limited its architectural freedom.

Ultimately, the team identified three "deal-breakers" that made their legacy environment unsustainable:

Vendor lock-in: Today’s multi-cloud and hybrid cloud environments demand a platform-agnostic infrastructure. Yet, Dartmouth’s existing software was moving in the opposite direction — becoming increasingly tied to a specific vendor's ecosystem (VMware).

Rising costs & constrained scaling: The licensing model was no longer aligned with Dartmouth’s needs. Increases in traffic often triggered disproportionately high costs, while complex licensing tiers made it difficult for the team to scale or innovate creatively.

Automation roadblocks: To provide true "Load Balancing as a Service," the team needed a robust, template-driven workflow. The existing API didn't support the level of deep automation and auditability required to offer users a truly self-service experience.

Meeting new criteria

The Dartmouth team followed a dictum from the famous UCLA basketball coach, John Wooden: “Be quick — but don’t hurry.”

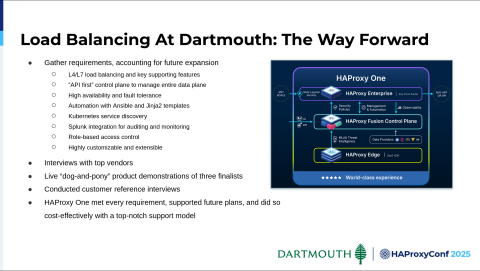

The team had established a high level of service for its users and wanted to maintain and improve on it. So they set out their requirements carefully, including:

Comprehensive load balancing: Robust support for both L4 and L7 traffic.

API-first control plane: A solution that offers total data plane management through a modern, programmable interface.

Deep automation: Built-in features to support a GitOps-style workflow.

Modern orchestration: Native service discovery for Kubernetes environments.

Extensibility: The ability to customize and extend the platform to meet unique institutional needs.

To find the right partner, Dartmouth conducted an extensive evaluation in which top vendors demonstrated their products, and the team also interviewed customer references. HAProxy stood out for “less grandiose marketing” and for the ability to run on-premises as well as in cloud-native deployments.

HAProxy One met every current requirement and supported future plans. The platform was found to be cost-effective and to feature excellent support.

"We interviewed many vendors, and HAProxy came out on top, particularly with the top-notch support model. It's beyond remarkable — it's unparalleled. Having that wealth of expertise is absolutely invaluable."

Building Rome in a few days

To replace their legacy environment, the Dartmouth team didn't just install new software; they engineered a robust, automated platform.

The deployment was centered around HAProxy Fusion Control Plane, integrating essential networking components like IP address management (IPAM), global server load balancing (GSLB), and the virtual router redundancy protocol (VRRP). To maintain consistency with their existing operations, they also implemented custom TCP and HTTP log formats using the common log format (CLF).

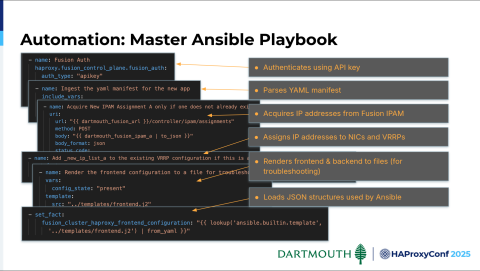

The team then built on their existing configuration manifests: YAML files, stored in a Git repo, that specify each user’s configuration options. A master Ansible playbook drives the whole process.

At the heart of this new system is a GitOps-driven workflow that makes infrastructure changes nearly invisible to the end user. The process follows a highly structured pipeline:

User input: Power users submit their requirements through a simple, standardized front end.

Manifest creation: These requirements are captured in YAML-formatted configuration manifests and committed to a Git repository.

Automation pipeline: Each commit triggers a Jenkins pipeline that launches a master Ansible playbook.

Configuration generation: Ansible uses Jinja2 templates to transform the YAML data into a valid, human-readable HAProxy configuration file.

Centralized deployment: The playbook authenticates to the HAProxy Fusion Control Plane via API and pushes the configuration to HAProxy Fusion as a single, centralized update.

Data plane synchronization: HAProxy Fusion then distributes and synchronizes the configuration across the entire fleet of HAProxy Enterprise data plane nodes, ensuring consistent, high-availability deployment at scale.
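The configuration generation step above can be illustrated with a minimal sketch. It is not Dartmouth's actual playbook or template: the manifest field names are hypothetical, the manifest is shown as the dictionary a YAML parser would return, and Python's standard-library `string.Template` stands in for Jinja2 so the example runs without extra packages.

```python
from string import Template

# Hypothetical manifest, shown as the dict a YAML parser would produce.
# Every field name here is an illustrative assumption.
manifest = {
    "name": "registrar",
    "mode": "http",
    "bind": "10.0.0.10:80",
    "balance": "roundrobin",
    "servers": [
        {"name": "web1", "address": "10.0.1.11:8080"},
        {"name": "web2", "address": "10.0.1.12:8080"},
    ],
}

# Stand-ins for the Jinja2 templates: one per HAProxy config section.
FRONTEND = Template(
    "frontend fe_$name\n"
    "    mode $mode\n"
    "    bind $bind\n"
    "    default_backend be_$name\n"
)
BACKEND = Template(
    "backend be_$name\n"
    "    mode $mode\n"
    "    balance $balance\n"
)
SERVER = Template("    server $name $address check\n")


def render_config(m):
    """Turn one manifest into frontend and backend sections of HAProxy config."""
    parts = [FRONTEND.substitute(m), "\n", BACKEND.substitute(m)]
    # One "server" line per backend server listed in the manifest.
    parts += [SERVER.substitute(s) for s in m["servers"]]
    return "".join(parts)


print(render_config(manifest))
```

Keeping the templates separate from the manifest data is what lets a user change only a few YAML values while the generated configuration stays consistent across every service.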

This modular approach provides Dartmouth with a "plug-and-play" level of flexibility. While the team is not deploying a web application firewall (WAF) at go-live, the framework is already in place to support it. Once the initial migration is complete and the team is ready to activate the HAProxy Enterprise WAF, adding that security layer will be as simple as activating a pre-tested template.

Observability without complexity

A big win for the IT team was the clear separation of responsibilities. Users are granted read-only access to HAProxy Fusion, allowing them to track the status of their requests and view their specific configurations in real time. Meanwhile, the IT team retains central control over the control plane, ensuring security and stability across the entire institution.

With every configuration change fully logged and auditable, troubleshooting has shifted from a manual "guessing game" to a data-driven process. Combined with HAProxy’s highly responsive support, Dartmouth now has a load-balancing environment that is not only faster and more cost-effective but also significantly easier to manage.

Keys to the new city

Sometimes it’s seemingly small things that turn out to be crucial to success. What made Dartmouth’s transition to HAProxy work so well?

The team manages more than 1,100 load balancer manifests, all of which were confirmed and validated against the new automation framework well before “go-live.” Specific “power” users were trained to use the HAProxy Fusion GUI, preparing them in advance for system deployment.
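Validating a large set of manifests before go-live might look like the sketch below. The source does not describe Dartmouth's actual checks, so the required fields, allowed modes, and function names are all hypothetical, and each manifest is again represented as an already-parsed dictionary.

```python
# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"name": str, "bind": str, "mode": str, "servers": list}
# L4 (tcp) and L7 (http) are the two proxy modes assumed here.
ALLOWED_MODES = {"tcp", "http"}


def validate_manifest(m):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in m:
            problems.append(f"missing field: {field}")
        elif not isinstance(m[field], expected):
            problems.append(f"{field} should be {expected.__name__}")

    mode = m.get("mode")
    if isinstance(mode, str) and mode not in ALLOWED_MODES:
        problems.append(f"unsupported mode: {mode}")

    servers = m.get("servers")
    if isinstance(servers, list):
        if not servers:
            problems.append("servers list is empty")
        for i, s in enumerate(servers):
            if not isinstance(s, dict) or not {"name", "address"} <= set(s):
                problems.append(f"servers[{i}] needs name and address")
    return problems


def validate_all(manifests):
    """Map each invalid manifest's name (or index) to its list of problems."""
    report = {}
    for i, m in enumerate(manifests):
        problems = validate_manifest(m)
        if problems:
            report[str(m.get("name", f"#{i}"))] = problems
    return report
```

Running a check like this across every manifest in the repository gives a single report of what must be fixed before cutover, rather than discovering problems one service at a time.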

The old architecture and the new one have been run side-by-side, so migration only requires a simple CNAME switch. If issues arise, users can fall back to the previous implementation, and behavior between the two systems can be easily compared in a real, “live fire” environment.

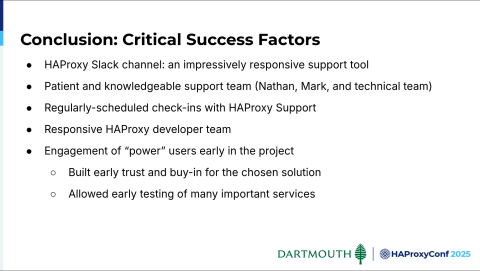

The team cited several critical success factors, including:

The HAProxy Slack channel for support, with unparalleled responsiveness and a highly capable team

A developer team at HAProxy that is consistently available and responsive

Power user engagement and trust through early testing and implementation

Every feature from the Avi environment has now been implemented on HAProxy One — and in the process, Dartmouth has been able to introduce new capabilities that didn’t exist before. The response to date has been very strong. Power users say, “This looks great. This is much better than what we used to have.”

Ultimately, Dartmouth didn’t just swap vendors; they built a platform that puts them back in control. By prioritizing automation and architectural freedom, the team has moved past the cycle of rising costs and closed ecosystems. They now have a high-performance, self-service environment that is reliable, cost-effective, and ready to scale whenever they are.
