What's the purpose of having a career where you have 20 years of one-year experience? In an industry increasingly dominated by automation and "best practices," distinguished engineer Kelsey Hightower challenges developers and system administrators to rediscover the fundamentals that make great technologists truly creative and effective.

Speaking at HAProxyConf, Hightower shares hard-won lessons from his 25-year career, from debugging deployment scripts at financial institutions to becoming a distinguished engineer at Google. Through personal stories and live demonstrations, he explores how understanding core principles—rather than chasing the latest trends—enables engineers to solve real problems and build lasting solutions. This session covers everything from modernizing legacy Fortran applications to the philosophy behind sustainable open source projects, all while demonstrating how tools like HAProxy succeed because they focus on fundamentals rather than feature bloat.

This comprehensive talk will teach you how to evaluate new technologies critically, understand when to modernize versus rewrite legacy systems, and develop the deep technical knowledge that separates truly skilled engineers from those who simply follow instructions. Whether you're just starting your career or looking to advance beyond senior levels, Hightower's insights offer a roadmap for building expertise that remains valuable regardless of which frameworks come and go.

Today, we’re going to talk about the fundamentals.

Some people here are probably starting out their careers. And if I had advice for you, there’s only two things you’ve got to worry about in this industry:

Does it work…or not? If it works, then you ain’t got nothing to worry about. If it doesn’t work, then you’ve only got two things to worry about.

Can you fix it…or not? If you can fix it, then you ain’t got nothing to worry about. If you can’t, then you’ve only got two things to worry about.

Can someone else fix it…or not? If somebody else can fix it, then you ain't got nothing to worry about. If not, you’ve only got two things to worry about:

Can you get another job…or not?

And I think we've gotten to the point where people have forgotten the fundamentals. I've seen people approach this work and they have no idea why they're doing what they're doing. They're just assigned the Jira ticket, and off they go like little robots. What is the purpose of having a career where you have 20 years of one-year experience? The ability to imagine is gone. Since you don't know how it works fundamentally, you have no idea how to reshape a thing you don't understand.

I think that's a huge problem when it comes to the technology landscape. Just download the software, hope it works, and if it doesn't, you call the vendor. It's no surprise that it's become easier to automate you out of a job because you're not doing much anymore.

This is a serious problem in our industry, and I think it's rooted in those fundamentals. The people who understand the fundamentals tend to be the most creative because they can see the low-level details. They can rearrange things to match whatever they need at that given moment, and they don't have to wait until a proven solution is available.

I see people say, "Hey, what's the best practice?" And I tell them, "just practice". Why do you want to be like everybody else? It's not the job.

I'm going to jump to my laptop. We'll put my screen on here. I'm going to talk about how I've trained my own mental model. All of you are these wonderful, individual, unique things. You're people. You're humans. And you all have developed your own worldview of technology and your model. So, I'm going to go to my laptop.

I remember my first job. I thought it was one of those serious jobs where you had to wear collared shirts and slacks to work. And I was like, "Oh, this is what real IT is about." Somehow, the collared shirt made you type faster.

I remember they taught me how to deploy—like, "Kelsey, we're going to teach you everything you need to know to deploy enterprise software." So, how is this different from other software? "Kelsey… it's the enterprise."

We have this long spreadsheet called "change management." We go line by line and decide: do we deploy the same software we've been deploying for 20 years to the same servers, or not? I was like, fascinating.

I got on the jump box, and they have these instructions. Have you ever seen them? It's like the build guide. They tell you step-by-step what to do. And every time people follow these steps, it's always this pseudo-emergency. They start this phone call with all the executives in case it goes down. The PMs, the InfoSec people, and the support people are all ready to see if the thing we do over and over again will work this time.

So, it's my turn. I'm new. And I jump on the jump box, and they're like, "All right, Kelsey, just run the deployment script." I'm like, oh, this is easy. Like, how hard can this be? So I copied and pasted directly from the build guide—no thinking required.

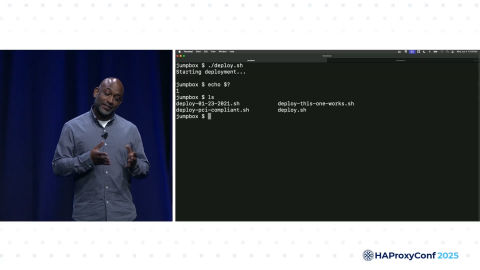

./deploy.sh.

And you're like, this is it. It's got to work. Starting deployments is, like, the easiest job ever.

Then it doesn't do anything. The UX for our tools is pretty bad these days. You just run these automated deployments, and somehow they should magically work. Does anyone know if this is working? How could you tell? Has anyone written scripts like this? Don't laugh. Half the room is like, damn it.

And so it finished. First week on the job and I'm like, yes! And then I echo $?. And it says 1. Now I'm a little nervous. Because everything I've been taught, zero means good. Anything that's not zero means you have a problem. And so I do what any IT professional would do.

I ask the person in the next cubicle, "Hey, you ever get an error with the deployment script?"

And without even looking at me, he's like, "Which one did you run?"

I said, "deploy.sh". What other one?

He's like, "Oh, no, that's the wrong one."

And then I run "ls". I'm like, what the hell is this?

Now, I'm working in financial services, so I'm thinking that the PCI-compliant one looks really attractive. The other one has the date on it, so that one might be important. But obviously, it's got to be this one: deploy-this-one-works.sh. All right? This has got to be it. Now, we shouldn't update the documentation because maybe we have mapped this one to the one that people should run by default.

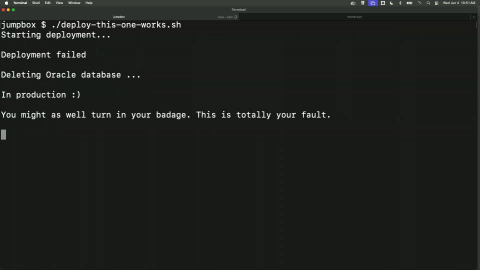

So we run this one. And so, I'm sitting there like, OK, this has got to be it. It's making a little bit of progress. I'm starting the same deployment. I'm thinking to myself like, oh, at least it told me it failed this time, which matches the last time.

And I'm like, oh! No, no, no, no, no, no, no! I'm thinking to myself, this is totally not my fault. I didn't write the script!

And this is literally how many of our tools work because, again, I think people forget the fundamentals of the job. These are different concerns. Sometimes they get bundled into these big packages only to be broken up again. We try to give these fancy result messages. But again, I think a lot of times when you go into these processes blind, this is what you end up with.
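The exit-status convention from the story (zero means success, anything else means failure) is what lets a wrapper say plainly whether a step worked. Below is a minimal sketch in Python, not the script from the talk; `run_step` is a hypothetical helper name, and the commands in the usage note are stand-ins.

```python
import subprocess
import sys


def run_step(command):
    """Run a command and report its outcome explicitly.

    Returns (ok, exit_code). By convention, exit code 0 means success
    and any non-zero value means failure.
    """
    result = subprocess.run(command, capture_output=True, text=True)
    ok = result.returncode == 0
    status = "succeeded" if ok else "FAILED"
    print(f"{' '.join(command)}: {status} (exit code {result.returncode})")
    if not ok and result.stderr:
        # Surface whatever the command wrote to stderr instead of hiding it.
        print(result.stderr.strip(), file=sys.stderr)
    return ok, result.returncode
```

Calling `run_step([sys.executable, "-c", "import sys; sys.exit(1)"])` stands in for a deployment script that fails; the caller gets the exit code back along with a message that leaves no doubt about the result.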

There's this thing that we've been doing for so long. There's this group therapy that we started in the industry. You may have heard of it: DevOps—this group therapy between developers and system administrators where we try to resolve these differences.

And then I wanted to better understand the fundamentals. I remember one fundamental around “building really good tools that matter”, but to do that I had to understand the actual apps and how they actually worked. Remember, I worked at this financial institution, and they're not necessarily using modern programming languages. They use things like COBOL and Fortran.

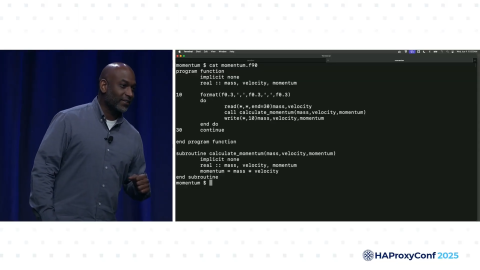

And I'm just going to show you a simple example. Can anyone tell me what this code does? Now, there are people in the audience with more gray hair than me. They're really excited right now. Does anyone know what language this is in? Fortran. I know you're like, “Oh, Fortran's making a comeback!” No. It is not making a comeback.

But what this does is really simple. It pulls from stdin three values. What this function does is multiply mass times velocity to get momentum. It reads in those variables, then calls this function, the subroutine below, called calculate_momentum(), does the math, yields the results, and then writes it back to stdout.

This is what people were doing a long time ago. And all the people are like, "Oh, modern infrastructure, modern applications. Kelsey, you don't understand. This is new, innovative stuff." Let me guess what your company is doing: you take data in, do something in the middle, and write it to a database. "Oh, how did you know?" That's what you all are doing!

Most of these fundamentals revolve around that. There's data, and then there's algorithms, and you combine them in unique ways. Yes, there's UX involved, how you gather that data—web browser, mobile device, using your voice, natural language. But at the end of the day, there is still data being serialized from that.

Some would argue that most human activities, whether you're buying something at a retailer or taking some action on your phone, yield a data set that will then be processed to some functionality. It's the whole purpose of computing, by the way. Without the data, it just wouldn't matter.

To run this, we need some input. Again, I'm teaching myself the fundamentals. So there's data here. This is a very simple CSV file with two values. We start with 10 and 35. It's like, how do you make this work? Well, you can compile the Fortran code. So, this is my modern Mac. Surprisingly, this stuff still builds, like, decades-old software still compiles.

I'm going to build Fortran with my little shell script, and now I have this Fortran binary. And so there's this binary on the server called momentum, and what I can do is cat the input file, pipe it to this thing called momentum, and it calculates all the values.
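The data-in, compute, data-out shape of the momentum program can be sketched in a few lines of Python. This is not the Fortran source shown on screen. It assumes each input line holds a comma-separated mass and velocity, and the name `process` is made up for illustration; `calculate_momentum` borrows the subroutine name mentioned in the talk.

```python
import io
import sys


def calculate_momentum(mass, velocity):
    """Momentum is mass multiplied by velocity."""
    return mass * velocity


def process(stream_in, stream_out):
    """Read 'mass,velocity' lines and write one momentum value per line."""
    for line in stream_in:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        mass_text, velocity_text = line.split(",")
        momentum = calculate_momentum(float(mass_text), float(velocity_text))
        stream_out.write(f"{momentum}\n")


# In-memory example; as a command-line filter this would be
# process(sys.stdin, sys.stdout).
example_out = io.StringIO()
process(io.StringIO("10,35\n"), example_out)
```

Because the function only knows about an input stream and an output stream, the same logic works at the end of a shell pipe, under a test, or behind a network wrapper.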

Most software that I've seen in my 25-year career, from Google to this small financial services company, involves something very similar. I know you all complicate it all with different formats and message queues and different types of databases and programming languages, but it is fundamentally down to this. And those that understand these fundamentals do really unique things to make things work. They build really cool data pipelines. They can manipulate any protocol and translate it to another, kind of like this whole HAProxy thing. It has that same kind of lineage.

When we look at software like this, sometimes we make a mistake. We call this "legacy software". Anyone ever use that term? Legacy software. I don't know about you all, but you're going to get old too. And you would hate for someone to refer to you in the manner that we refer to some of our technology stacks. And some of you have this innate fear of hiring your future selves.

Usually in life, when you do something really well for a really long time, you become legendary. They make statues of some of these people. They go into the Hall of Fame.

And so, being good at something for a really long time is a badge of honor. And one of the things that made me excited about the HAProxy team is that they've been around for a long time.

There's another fundamental I learned during this time, which was the one around this part where you grow as a human being. Most of us strive in our careers to be like senior engineers. I was very lucky. I became a Distinguished Engineer at Google—very prestigious situation given that for a couple hundred thousand employees, only a hundred or something are regarded as Distinguished Engineers.

I get a lot of questions about, how do you become a Distinguished Engineer? I always try to warn those people: you've got to be careful. You don't want to spend your whole career chasing becoming a Senior or Distinguished Engineer and remaining a junior human being.

Once I learned the fundamentals, that company had this problem in production. We cut over some of the apps from the mainframe and moved them into the new modern Java stack at the time. No more DB2. Now we're running on Oracle.

And I remember we cut over, and we were using a particular, popular load balancer at the time, and it was using so much memory per request. If you've ever had to process credit card transactions, you know you get seven milliseconds. If you don't provide a response, companies like Visa will make a response for you: either default-decline or default-allow. And then they give you a penalty whenever they have to stand in for the request.

I'm sitting here, a pretty junior engineer, maybe mid-career, and I bet my whole reputation that I could fix it. I'm in the corner on my laptop, figuring out how to swap out the popular proxy at the time for this little small one, this little "HAProxy" thing. It didn't have all the features. Turns out, we didn't need them all. All we needed was SSL termination, and we needed to have as little memory usage per request as possible.

I would watch the change window go by where the team would just fail. We would cut over to the new system. Transactions would come in. The popular load balancer would die. And we would do a new change window starting at midnight. I remember the CTO being frustrated every change window because it didn't work, and we had to flip back to the mainframe.

It gets embarrassing when you have to send letters to all your customers telling them that the new platform doesn't work. And we can't tell them specifics. "The load balancer can't handle the same amount of requests as the old load balancer was doing. We thought this was supposed to be the modern stack. Turns out, it can't even handle the existing platform that we have."

And so I'm suddenly in a corner, staying at the ready. I got this little configuration file, which probably has 25 lines. I stripped out all the cruft because all we really needed was to terminate and send to a backend. That's it. But it needed to be as performant as possible. I got told “no” for probably two weeks. "New technology, we're not sure about it, something, something security, something, something PCI." And I'm just waiting, and they're failing.

One day, I bet my career and said, "Hey, listen, if it doesn't work, fire me. But I think I can make it work. But, you're going to have to let me swap it out and give me as many restarts as possible."

I come in at midnight, start the binary side by side, and immediately forget that you can't have two things binding on port 443. I debug that while sweating. Shut it down. Bring down the other one. Restart this one quickly. We're binding it to the port.
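The port conflict is easy to reproduce. The sketch below, in Python, uses an OS-assigned port on localhost instead of 443 (which normally needs elevated privileges); `try_bind` and `demo` are hypothetical helper names.

```python
import errno
import socket


def try_bind(port, host="127.0.0.1"):
    """Try to listen on host:port. Returns the socket, or None if in use."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))
        sock.listen()
        return sock
    except OSError as exc:
        sock.close()
        if exc.errno == errno.EADDRINUSE:
            return None
        raise


def demo():
    """Return (conflict, took_over) for two listeners on one port."""
    first = try_bind(0)             # port 0: let the OS pick a free port
    port = first.getsockname()[1]
    second = try_bind(port)         # same port while the first holds it
    conflict = second is None
    if second is not None:
        second.close()
    first.close()                   # stop the old listener...
    third = try_bind(port)          # ...and the new one can take over
    took_over = third is not None
    if third is not None:
        third.close()
    return conflict, took_over
```

The second listener is refused while the first is still bound, which is why the cutover described here had to stop one proxy before starting the other on the same port.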

Have you ever been in one of those war room scenarios? You know, the kind where people have assigned seats and get super locked into what's happening. And all we're doing is watching the memory footprint hold steady as we ramp up more and more traffic on the other side.

At that point, at that moment, is when I kind of landed the plane. There's launches and landings. A lot of people have good ideas. They launch things, but they don't land them. I landed that idea into production, and it held.

For the next three days, we're all just looking at this one metric, memory usage, because we know if it hits that threshold, it's going to fall over, and we're going to have to migrate again. But we didn't have to.

I think that's when I earned my technologist stripes. It wasn't that I was able to explore new technology, it was that I was able to curate it for the specific use case at hand. And I understood what it meant to put my reputation on the line.

So, a lot of times, we talk about new technologies, but we don't talk about the nuance of, do you know how to get into production? Do you understand what it takes to get this stuff to fit well with the existing stack? Most people don't. They never get that far.

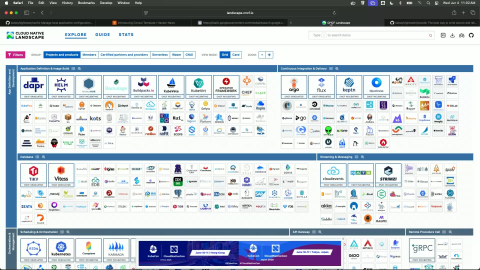

Now, once I really get confident at this, I look at the user experience of some of these things. And if someone asked me, "Hey, Kelsey, how would you modernize something like your Fortran app over there?"— I know a lot of you would just go online and start looking for stuff like the CNCF landscape. And some of you all are, like, playing bingo with this thing, like, "Oh, I got some Prometheus, Kubernetes, Argo." Some of you have already done this. This is why you're laughing.

And you realize that you pull in 12 of these projects when you only need one. If only you had a little bit of time to think about what you're actually doing, the fundamentals of it all.

I watch people build these huge Rube Goldberg machines and then quit. They just leave. Why? Because they can never integrate it into production. They can do it for the net-new side of things, but they can't make it work for the thing that actually makes the company money. That's the hard part. That's the engineering part, not the science experiment part.

So, you go through all of these logos, and I would say, “Well, look, maybe the first step is we make an HTTP wrapper”, and then we containerize it so we can deploy it on the VM, Kubernetes, Heroku, doesn't matter. But we don't necessarily need to bring in all of these things just to provide an HTTP interface to this Fortran app, right? And we don't need to rewrite the Fortran app, necessarily. There are ways that we can just wrap it. And I'm going to show you the code because I think people often overthink what's required for this.

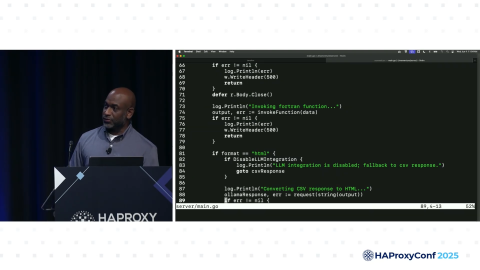

I wrote a little Go shim. If you look at this server, we'll just look at some of the code. It's really simple. I even have LLM integration, but it's disabled by default. This allows me to format different outputs. So instead of just CSV, it can output JSON or something else. But that's not the important part.

The important part is that I just start a little HTTP server. I don't add a lot of functionality, because I'm a big proponent of reusing things like HAProxy in front of it instead of rewriting everything. I added a few health checks just to make sure that it works. All it does is write, "it works." And then I just have a little function called calculate().

This calculate() function receives a CSV file, parses it as CSV, and then shells out to the Fortran application. It's really simple: it invokes the Fortran program with the same stdin that I would have used on the command line. This is like a REST API.
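The wrapper idea can be sketched as follows. This is a Python approximation, not the Go shim from the talk: the `./momentum` path, the `/healthz` and `/calculate` routes, and the port are all assumptions made for illustration.

```python
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption: location of the compiled legacy binary (hypothetical path).
LEGACY_COMMAND = ["./momentum"]


def calculate(csv_text, command=None):
    """Pass CSV text to the legacy program on stdin and return its stdout."""
    result = subprocess.run(
        command or LEGACY_COMMAND,
        input=csv_text,
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout


class Handler(BaseHTTPRequestHandler):
    def _reply(self, status, body):
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        if self.path == "/healthz":  # hypothetical health check route
            self._reply(200, "it works\n")
        else:
            self._reply(404, "not found\n")

    def do_POST(self):
        if self.path != "/calculate":  # hypothetical route
            self._reply(404, "not found\n")
            return
        length = int(self.headers.get("Content-Length", 0))
        csv_text = self.rfile.read(length).decode("utf-8")
        try:
            self._reply(200, calculate(csv_text))
        except (OSError, subprocess.CalledProcessError) as exc:
            self._reply(500, f"legacy program failed: {exc}\n")


def serve(port=8080):
    """Serve on localhost; a proxy in front can handle TLS and routing."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

The server stays deliberately thin. TLS, rate limiting, and routing are left to whatever sits in front of it, which matches the point about reusing a proxy instead of rebuilding those features.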

I remember there's a lot of companies spending so much time like, "Oh, we're going to have APIs for all our apps." I'm like, okay, data in, process it, data out, maybe in a different format. But it's these 80, 90 lines of code that, if you actually know what you're doing, it's typically all you need to modernize things. Do you need to rewrite the Fortran into REST? Only if you care about your LinkedIn profile, not in reality.

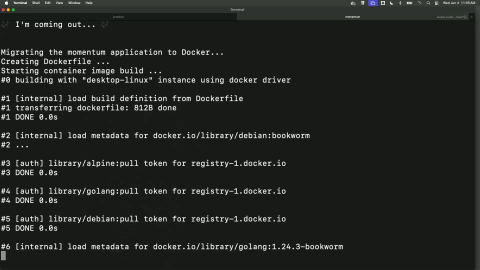

Now to put this all together. I wanted to make it super easy for my team to deal with this, so I made this little tool called "Diana". Diana tries to migrate these Fortran apps with this little wrapper and then maybe containerize them. But you want these things to be fun to use. They shouldn't be boring, folks. You've got to spice it up a little bit. Be creative. What can you do?

So, I wrote this little tool called "Diana". What Diana does is, you know, there's this thing where we do documentation. Your apps can tell people how to use them, maybe give them examples. Here, you just point to the application. In this case, I default to momentum, and run it. So, let's do this.

[The Diana application plays a recording of "I'm Coming Out", sung by Diana Ross]

What, y'all don't have music for your command line tools? You can clap.

But I do want you to take a moment to understand that feeling. When you're showing people technology, you want them to feel like that, at least the first time. I like infrastructure to be boring, but not while I'm working on it. Not when I'm crafting the individual Lego blocks to show people what the art of the possible is.

In this case, this is a very simple situation where I've modernized this application. It doesn't take six months. It doesn't take nine months. It takes understanding the fundamentals in order to do this. I understand the fundamentals of the building blocks here, so it's not hard for me to craft such a tool that works this way. I don't need to create a tool that solves the world's problems. I need to craft a tool that solves my problem.

Now, over time, in my career, I did branch out. I branched out to a world where I wanted to create my own open-source projects and share my ideas.

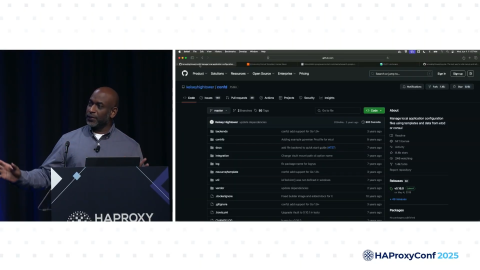

I contributed to a lot: Python, Ruby gems, Puppet (the configuration management tool). But there was this moment when I made my first open-source project. This is my very first project that I started from scratch, called Confd.

I was working at Puppet Labs, and there was this new programming language that came out called Golang. I'm sitting there at the dawn of DevOps. It's around 2012, and I think the whole world will optimize itself around configuration management. And then Go comes out, and then Docker comes out, and then Terraform comes out. And everything I thought to be true, everything that I thought, from a trajectory standpoint, got blown up. I realized I was on a dead-end trajectory. Config management was no longer going to be necessary in the next decade. And so, I jumped ship.

But I knew I needed the fundamentals of config management, so I built this Confd tool. All it does is allow you to build just enough configuration to bootstrap your application. If you are running in a container, how much configuration management do you need?

All I did was set a few keys in something like etcd or Consul or Vault back then. I allowed your application, at runtime, to specify what values it needed, and I generated an output file. That output file would become your configuration file for your application and would restart it. Because in the world of Kubernetes and Docker (though, Kubernetes didn't exist at this time), that's all you needed.
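The render-from-keys idea can be sketched like this. It is a Python illustration of the concept only, not Confd's actual template syntax or API: the dictionary stands in for a store such as etcd or Consul, and every key, name, and template below is hypothetical.

```python
from string import Template

# Stand-in for a key-value store such as etcd or Consul (hypothetical keys).
STORE = {
    "/myapp/db/host": "db.internal",
    "/myapp/db/port": "5432",
}

# The application declares which keys it needs and how to lay them out.
CONFIG_TEMPLATE = Template("db_host = $db_host\ndb_port = $db_port\n")
KEY_MAP = {"db_host": "/myapp/db/host", "db_port": "/myapp/db/port"}


def render_config(store, template, key_map):
    """Look up each required key and fill in the template."""
    values = {name: store[key] for name, key in key_map.items()}
    return template.substitute(values)


def sync(store, template, key_map, current):
    """Return (new_config, changed).

    A real tool would write the file and restart or reload the
    application only when `changed` is True.
    """
    new_config = render_config(store, template, key_map)
    return new_config, new_config != current
```

Comparing the rendered output with the current file before acting keeps the application from restarting when nothing it depends on has changed.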

So I had this really popular project, but I made a mistake. It wasn't very extensible. Every time I wanted to add new functionality, I had to add a whole new backend. I wrote most of these by myself. I eventually got some contributors. A couple of the contributors were a guy named Mitchell Hashimoto and Armon from HashiCorp. They had just started the company, and they were starting to build out new tools. Consul came out, and eventually, Vault came out.

And they asked me one day, like, "Hey, Kelsey, we want you to add support for Vault." Back then, I didn't think it was going to be a good idea, so I said no. And what happens is—when you tell the community "no"—they figure out a different way.

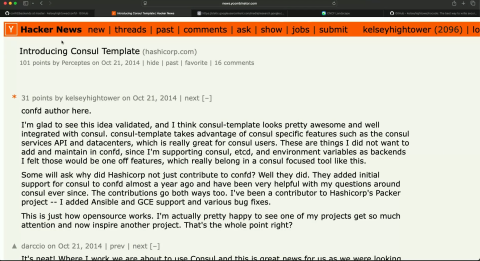

I remember there was this Hacker News thread where HashiCorp announced a competing project called Consul Template. And man, you would swear that all the people on Hacker News were related to me because they were mad at HashiCorp. "How dare you compete with Kelsey Hightower's Confd project; you should have just contributed there."

And I had to jump in. I was like:

"Hey, Confd author here. I'm glad to see my idea validated. And I think Consul Template looks pretty awesome and well integrated with Consul. Consul Template takes advantage of Consul-specific features such as Consul services API, et cetera, et cetera.

"Some will ask, why did HashiCorp not just contribute to Confd? Well, they did. They added the initial support for Consul to Confd almost a year ago and have been very helpful around questions ever since, around Consul. But contribution goes both ways. I've contributed to their projects. I've added the first support for Ansible for GCE.

"But this is how open source works."

All ideas don't need to coalesce around a single project. I remember someone telling me this phrase: "some people have ideas, and some ideas have people". I had this idea that configuration management didn't need to be this big, monolithic thing. It could be these little utilities. And I watched for the very first time that one of my ideas outgrew me. And I think that is the fundamentals of open source.

The licensing, pick your favorite. But if we want these projects to exist, you have to be willing to pay for them. As a maintainer of this project for six or seven years, the hardest thing as a maintainer is to think about sustainability. For a long time, I thought these projects were about a marathon, like, I will be running this race forever, and I only needed to learn to pace myself. Now I believe that that's false. It's more of a relay race. You need to think about who you will hand that baton to. You will not want to work on these projects for the rest of your life. And given that, you should be thinking about the successor plan, other maintainers, and who you pass that baton to.

But I do think it's on us to figure out how to support these things. I'm not going into detail about how I feel about open source license changes, but I do know what it's like to try to run your own business. If no one's willing to pay you for the software or the product that you build, then those things won't exist anymore.

And if you're in the audience, and we talked earlier in that opening keynote about ways to contribute, sure, source code is welcome. Sure, documentation is welcome. User studies are welcome because they give confidence to other people in the industry that it's safe to use these things.

But sometimes, it's in your best interest to fund the actual projects.

If you're backed by a company that has an open-source project, maybe you should buy a license. That's our individual responsibility, I believe, if you want to see these projects continue to thrive.

Now, we're going to have a long Q&A period because I'm pretty sure people have lots of questions about lots of things. But one more thing on the fundamentals. One thing that I think we can do as a community is learn how to explain complex topics in a very simple way. But you have to do more than just read the surface of documentation. You need to understand these things.

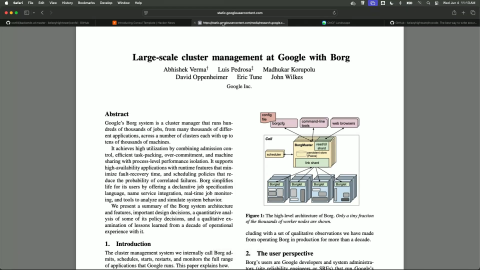

When Kubernetes first came out, there were nice white papers, and some of you pretend, like, y'all be reading white papers. No, you don't. And with these white papers, there's lots of diagrams, sometimes lots of history. And they give you some broad details. And sometimes it goes super deep. But most people can't explain any of these topics in simple terms.

And so when people walk up and say, "Hey, Kelsey, can you explain the value of Kubernetes and bin packing and scheduling?" And I ask myself, hmm, maybe I should walk them through the highlighted sections of this white paper. That doesn't move the needle, folks. Sure, you look smart, but it doesn't make the other person feel smart. You've got to go to that next level, and this is really key to the fundamentals, because this is one thing humans do really well or should be able to do really well.

I remember one time at a conference. I was like, you know what? I'm not going to use my laptop this time. No slides. I'm going to try to explain Kubernetes without mentioning the word Kubernetes. No live demos, nothing. How would you do it?

I was born in the '80s, '81 to be exact, and I remember spending a lot of time playing Nintendo. One of my favorite games was Tetris®. We heard earlier today that you can run a Tetris®-like game inside HAProxy. Maybe don't do that, but it's cool that you can!

I was thinking about Kubernetes and how it's different from something like DevOps, for example, and I drank the DevOps Kool-Aid—a lot, right? In the DevOps world, we like this idea of automation and one-click automation, to be exact.

[Kelsey starts a new game]

Oh, that's dope music.

Okay, so here's what one-click automation looks like in real life. People think they're really fancy, like, one-click automation is the end game.

One-click automation looks like this. A lot of people complain about their cloud bill being expensive and wasted resources. This is how you get there—one-click automation. And you walk off like you did something.

This is what most people's environments look like. You have these fixed automation things that don't examine the playing field. They don't account for CPU or memory utilization. All they do is just write scripts based on what they know with no feedback loops from the rest of the environment. And so, while it's on autopilot, you'll eventually run out of resources. And then you invent this thing called "auto-scaling", so you can do the most inefficient thing forever or until the money runs out. This is kind of the core of the industry.

And so, people say, "Where are we going next?" The next generation of systems has to have the full feedback loop. So, what does Kubernetes do differently than your deployment bash scripts? I show them one thing that it does differently, and it's like a very simple thing that tends to unlock this in people's minds.

Instead of one-click deployments, assuming everything about the environment, you have to pay attention to the environment itself. The way you pay attention to the environment is you've got to examine every piece. As the pieces come in, you put them in the best-fit scenario. You look at the different pieces, and you line them up. All pieces aren't necessarily the same. The JBoss app may not be the same as the other app.
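The best-fit idea can be sketched as a toy placement loop. This is a Python illustration only, not the Kubernetes scheduler's algorithm: node names, workload names, and the scoring rule (adding leftover cores and leftover GiB) are simplifying assumptions.

```python
def best_fit(nodes, cpu_needed, mem_needed):
    """Return the name of the node that fits the workload most tightly.

    `nodes` maps node name -> {"cpu": free cores, "mem": free GiB}.
    Returns None when no node has enough free resources.
    """
    best_name, best_leftover = None, None
    for name, free in nodes.items():
        if free["cpu"] < cpu_needed or free["mem"] < mem_needed:
            continue  # this piece does not fit here
        # Crude score: whatever would be left over after placement.
        leftover = (free["cpu"] - cpu_needed) + (free["mem"] - mem_needed)
        if best_leftover is None or leftover < best_leftover:
            best_name, best_leftover = name, leftover
    return best_name


def place(nodes, workloads):
    """Place each (name, cpu, mem) workload and update free capacity."""
    placements = {}
    for name, cpu, mem in workloads:
        node = best_fit(nodes, cpu, mem)
        placements[name] = node
        if node is not None:
            # Feedback loop: the next decision sees the updated capacity.
            nodes[node]["cpu"] -= cpu
            nodes[node]["mem"] -= mem
    return placements
```

Unlike a fixed deployment script, each decision here reads the current state of the environment first, and a workload that fits nowhere is reported as unplaced instead of being forced onto a full node.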

Some of you are like, “Wow, Kelsey, you can play Tetris® and talk at the same time!” Yes, I can. Or at least I'm trying my best to do that.

When we think about these algorithms and how they fit things together, the goal of our profession is to try to make the best decision you can with the best information that you have. The dope thing about these new systems is when that perfect piece comes in, it could be one of these new ML workload jobs because it's all modern; it's roughly the same. All of them need compute cycles. All of them need storage. All of them need memory. And when that job is finished, you clear out the unneeded jobs.

This is the thing that Kubernetes does so well. Some of you, if you had enough time and enough experience, could write scripts that examine the whole world. But this fundamental understanding gives us ways of simplifying the things that we do, so that other people feel smart. Other people know what the individual building blocks are, so they can be creative. I think that's the cornerstone of our community: learning the fundamentals, so that you too could decide where we go next.

Thank you.

I left plenty of time for Q&A, so we have some on the live stream. There are mic runners here, and we're going to take questions from the audience. I'm going to leave my computer up just in case I can answer something live, but the floor is yours. Any question is fair game.

Q&A

What are the most effective ways to vet new industry trends?

What's the best way to vet new industry trends? If you work at a company that wasn't started yesterday, you have a stack that actually works. And if you gave that stack a version number, it's probably on version 14.3.2. And you see a new industry trend that arises, and the first question you've got to ask is: does it provide any value to me?

Let me put this as an example. Let's say you don't have one of the streaming services that doesn't show ads. There's these things called commercials, and they just interrupt the thing you're watching. So, you're sitting there and watching a commercial for a breakthrough medication. It's for people whose arm can fall off at any moment. If they don't take this medication, the arm falls off. And you're sitting there, and you’re like, “Wow, I would really love to be on that prescription!” I don't think you want that prescription.

A lot of times people look at industry trends as if everything applies to them. It doesn't. I think the best way to analyze these things is literally to go into a real-world environment, your real-world environment, and ask: does this new thing provide any value to you?

I met someone, they're like, "Hey, Kelsey, should I be using Kubernetes?"

I'm like, "What do you do?"

He's like, "Oh, my company makes a billion dollars with three servers."

I said, “Listen to me very clearly. Ignore anything to do with containers or Kubernetes. Offers zero value to you. If you make a billion dollars with three servers, SCP is all you need. Call it a day.”

So that would be the way I do it, actually. Just be an engineer. Go through the scientific process, take that thing, see if it provides value, and if it does, no one will have to tell you why, because you will discover it on your own.

What do we have to keep in mind when implementing AI solutions, and do you see any impact with AI solutions in the open source ecosystems?

When I hear a question like that, it's like someone asks, "How do we do Internet solutions?" What's the Internet? All of it? Are we talking BGP? TCP maybe? HTTP/3? What about TLS certificates? Encryption's cool. It's such a broad statement that it's hard to give an answer.

So if I was super bullish, AI changes everything. You should buy my product.

And the unfortunate thing, in my opinion—seriously—is it feels like we've reduced human intelligence to the point of thinking that only what people do with computers is considered intelligence. So, artificial intelligence it is.

When people ask me these questions, I'm like, "What are you currently doing? What's the current process?"

"Oh, well, we have a manual process where we try to analyze sales data from the store."

And I think about that for a moment. Why is it such a manual process? If the receipt has any structure, you can add structure to it, and you can put it in this thing, you ready? It’s called a database. And you put that data in a database and you can run analytics on it.
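As a rough illustration of that step, here is a minimal sketch using Python's built-in `sqlite3` module. The receipt fields, store names, and the `total_sales_by_store` helper are all hypothetical, chosen only to show structured receipt data going into a database and an analytics query coming out.

```python
import sqlite3

# Hypothetical receipt rows: (store, item, quantity, unit_price_cents).
RECEIPTS = [
    ("downtown", "coffee", 2, 350),
    ("downtown", "bagel", 1, 275),
    ("airport", "coffee", 5, 400),
    ("airport", "water", 3, 200),
]


def total_sales_by_store(rows):
    """Load receipt rows into an in-memory database and total sales per store."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE receipts ("
            "store TEXT, item TEXT, quantity INTEGER, unit_price_cents INTEGER)"
        )
        conn.executemany("INSERT INTO receipts VALUES (?, ?, ?, ?)", rows)
        # Plain SQL does the analytics: revenue per store, highest first.
        query = (
            "SELECT store, SUM(quantity * unit_price_cents) AS total_cents "
            "FROM receipts GROUP BY store ORDER BY total_cents DESC"
        )
        return conn.execute(query).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for store, total_cents in total_sales_by_store(RECEIPTS):
        print(f"{store}: ${total_cents / 100:.2f}")
```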

And then sometimes people want to skip that fundamental step and go to a much more rigid tool like AI that does a really good job of approximating unstructured data. When I think about the use cases around AI, I like to start with, "Show me something only AI could do so I can understand my alternatives."

AI is not a cheap thing to run. Inference is not the cheapest thing in the world: the amount of electricity, the GPU cycles, not CPU cycles. This is not the cheapest way to return a result. For example, if you wanted to translate CSV to XML, there are libraries that do that with extreme accuracy and for a very low cost. If you don't understand the fundamentals around clock cycles and memory usage, and you try to use an LLM to convert JSON to XML, you are wasting your money. You have to really understand what value you get from it.
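To make the CSV-to-XML point concrete, here is a small sketch that uses only the Python standard library. The `csv_to_xml` function and its tag names are illustrative, and it assumes every column header is already a valid XML element name.

```python
import csv
import io
import xml.etree.ElementTree as ET


def csv_to_xml(csv_text, root_tag="rows", row_tag="row"):
    """Convert CSV text with a header row into an XML string.

    Assumes each column header is a valid XML element name.
    """
    root = ET.Element(root_tag)
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = ET.SubElement(root, row_tag)
        for column, value in record.items():
            # ElementTree escapes special characters such as & and < on output.
            ET.SubElement(row, column).text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(csv_to_xml("name,city\nAda,London\n"))
```

The conversion is deterministic and runs in a few CPU cycles per field, which is the kind of comparison worth making before reaching for a model.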

The thing I like about AI, if I just wanted to be positive for a second, is this ability to get to a more user-friendly user interface. SQL is not user-friendly for people who don't know SQL at all. So the ability to say, "find the oldest customer in the last 10 years", is an amazing query language because that's how I'm going to write it in the Jira ticket that someone's going to take out and turn into a SQL statement, maybe put it into some business intelligence system and create a dashboard for me. That's a lot of steps when I can compress all of that into a new type of state machine that can take English and provide that as an output. That is phenomenal. That's going to be an amazing building block. But you still need a product for that to sit around. So that's my kind of feedback on that one.
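For comparison, this is roughly what the hand-written end of that pipeline might look like. The sketch assumes one reading of the request (the customer with the earliest birth date among those who signed up in the last 10 years); the table layout, the sample rows, and the `oldest_recent_customer` helper are hypothetical.

```python
import sqlite3


def oldest_recent_customer(rows, today):
    """Return the name of the oldest customer who signed up in the last 10 years.

    rows:  iterable of (name, birth_date, signup_date) with ISO dates (YYYY-MM-DD).
    today: ISO date string used as the reference point for "the last 10 years".
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE customers (name TEXT, birth_date TEXT, signup_date TEXT)"
        )
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
        # ISO dates sort correctly as text, so plain comparisons are enough.
        query = (
            "SELECT name FROM customers "
            "WHERE signup_date >= date(?, '-10 years') "
            "ORDER BY birth_date ASC LIMIT 1"
        )
        result = conn.execute(query, (today,)).fetchone()
        return result[0] if result else None
    finally:
        conn.close()


if __name__ == "__main__":
    customers = [
        ("Ada", "1950-03-01", "2020-01-15"),
        ("Grace", "1940-12-09", "2010-05-20"),  # signed up too long ago
        ("Linus", "1969-12-28", "2018-07-04"),
    ]
    print(oldest_recent_customer(customers, "2025-06-01"))
```

Even this short query forces a decision about what "oldest" and "last 10 years" mean, which is the translation work the ticket normally hands to a person.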

On cycles of fundamentals and MCP

Audience

We talked about this internally recently. And you actually posted about MCP. And somebody was trying to explain MCP. And I internally said, “CORBA”. And you, I think, posted exactly the same on Bluesky. So it kind of reminded me of the fundamentals; do you think it's actually a cycle of the fundamentals? I happen to know some of the people who invented CORBA, and they are probably sitting there saying, “Oh, shit. Like, we did this 30 years ago. And now it's MCP. And maybe we should have made the billions that people are making on the MCP.” So is it a cycle at the same time?

Kelsey

I mean, look, context matters. History is important, and timing matters. Solaris was so ahead on everything—NFS, object store, containerization, Solaris zones—just too early (way too early). And so people may look at Solaris back then in that context as a failure. But then, if you're Docker, you've changed the world.

And so when I think about things like MCP… For those that don't know, you have this LLM. This thing has its own worldview that gets created by its training set. You can ask it questions. It can give you responses based on its cutoff date. We know this. But what happens if you want that thing to interact with your Salesforce instance or your Oracle database? We need a shim. That's just computing 101. If you're a developer, if you've written any code in your life and you want to connect to a database, you need a database client. We've known this for decades.

And yes, it can hurt when you watch people say, "Hey, we have this new thing called Model Context Protocol."

And you're like, "Okay, what does it do?"

"You can send an HTTP request and it will call code and retrieve data and send it back. It's going to change everything."

And you're sitting there like, “I don't know. Haven't we been doing this for a long time?” But we haven't been doing it in this context.

I think that’s what makes it exciting. In this world where you had a limitation for the model that didn't have integration points to the existing world, the first one to remind people that this is possible tends to win.

And the nice thing about MCP, honestly, is its simplicity. If you look at it now, I think JSON-RPC is a big part of it. You can read the spec in five minutes. You can create one using a bash script if you know what you are doing. You can integrate with your existing code base and return the response, and then it just works.
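To give a feel for how little machinery that involves, here is a sketch of a generic JSON-RPC 2.0 request handler. It is not an MCP implementation: the `lookup_customer` method, its parameters, and the canned result are hypothetical, and it only handles single requests with named parameters. The error codes are the ones defined by the JSON-RPC 2.0 specification.

```python
import json


def lookup_customer(customer_id):
    """Hypothetical integration point; a real one would call existing code."""
    return {"customer_id": customer_id, "name": "Example Customer"}


# Registry mapping JSON-RPC method names to ordinary Python functions.
METHODS = {"lookup_customer": lookup_customer}


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response string."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw):
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, -32700, "Parse error")
    if not isinstance(request, dict):
        return _error(None, -32600, "Invalid Request")

    request_id = request.get("id")
    handler = METHODS.get(request.get("method"))
    if handler is None:
        return _error(request_id, -32601, "Method not found")

    # Named parameters are passed straight through to the registered function.
    result = handler(**request.get("params", {}))
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


if __name__ == "__main__":
    print(handle_request(
        '{"jsonrpc": "2.0", "id": 1, "method": "lookup_customer", '
        '"params": {"customer_id": 42}}'
    ))
```

Put this behind any HTTP endpoint and the shape is the familiar one: a request names a method, existing code runs, and a response comes back.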

When it comes to things like MCP, I think it's the fact that we took existing tools to solve a new problem that makes it exciting. We already have global consensus on using HTTP as an integration point. And for all the people who just want to move fast, that's the exact solution they needed. We'll teach them about JWT tokens, scoped tokens, and security later once they lose all their data. But for now, we can celebrate with them.

Would it be right to say less is more, understand the actual needs, and keep it simple where possible?

Yeah, I think so, 100%. And again, I want to be clear about how hard this is. When I was working at CoreOS, not too far from here, I remember I was writing Golang and we built this thing called the “container network interface”. If you've heard of CNI, me and one other person at CoreOS worked on the initial version. And we wrote everything in Go. We had this kind of company philosophy. Everything will be written in Go unless you're working on the operating system.

I'm writing this network interface in Golang. And I remember, during runtime, if you are using something like Golang to make a system call, and if the kernel context-switches you, your system call can end up on a different thread. And bad things happen when this happens.

And so I'm like, “Hey, there's this bug.”

And the guy next to me named Eugene, he's a C/C++ programmer, and he's like, "I think Go has a bug."

I said, "You mean Go, the programming language? The runtime has a bug?" I was like, dude, just say you don't know Go code, and you're just learning; don't try to blame it on the runtime.

And lo and behold, right in front of me, he dumps the assembly generated by the Go compiler, and he points out the bug. He's like, "Yeah, you can't do that. That's not how that's supposed to happen when you make system calls." He goes upstream to the Go repository.

I'm watching like, “I don't know, Rob Pike and those guys are pretty smart.” But he flags the bug, and it turns out this is a bug that has been plaguing Docker and other applications written in Go for a very long time. He knew a layer below me, the building blocks.

So I think it just reinforces that you must understand the fundamentals. And if you think you do, look one layer below. And there may be another set of fundamentals. And I know what you're thinking. Why do I need to learn all of this if the LLM will learn everything? I promise you, if you can't tell what good looks like, you will not be able to tell the difference in what response you're getting. I think there is some value in using tools like that as a shortcut, as an assistant. But if you can't tell what's good or right, how would you know whether we should be committing that to production or not? So I still think there's value in understanding these things.

On balancing fundamentals with industry expectations

Audience

You said earlier in your talk, do you need to rewrite the Fortran app in Rust? Only if you care about your LinkedIn. The fundamentals are what ultimately matter, but we're often forced to BS or keep up appearances in general in the industry, whether it's for RTO or if it's your LinkedIn profile, resume-driven development and whatnot. This is just something I've noticed more and more. Do you have any words of wisdom for how you balance what matters, what's real, the fundamentals, with the expectations of appearance that are often forced upon us?

Kelsey

Here's what I did. There's probably a thousand right answers depending on the context. I always tried to "show, don't tell". Say people are getting super excited about something. I think as an engineer, your job is to take the new thing people are super excited about, which they may or may not understand, make sure that you're not overly biased and that you're not the one who's incorrect, and then pull it down and try to use it in that context. If you have a better, simpler way, or an existing solution, show that instead. I'm going to show you one thing that came to mind when I saw this.

As a Distinguished Engineer, I work with lots of engineering teams. And I remember, I was sitting in the San Jose airport because I had just met with a team that was overengineering everything. I was like, "Can you just stop typing for five minutes? What you have already worked on is fine."

So I sat in the airport, and I made a new framework. And that framework is called “No code”. It has like 63 stars. And it's a really dope framework, by the way. It's the best way to write secure and reliable applications—write nothing, deploy anywhere. Like, I'm making bold claims. And so when you look at it, it's like the best way to write everything. Here's how you get started. You get started by writing no code at all. And in order to do this, you have to understand what existing solutions are out there in the world. If you see someone implementing, like, proxy features, you say, “Hey, there's this thing called HAProxy. You can put it there, and it does all of these things that you have on the roadmap. Please stop."

To add more features is easy. You can build the application. I mean, this thing. It's ready for any code generation that's out there. Like, you don't even need an LLM to generate this code. If you think it, it comes to life. And then someone asked me about contribution. You don't. But people kept trying, so I wrote a contribution guide for “No code”. And it's really simple. All changes are welcome as long as no code is involved. If you run into any bugs, please file an issue. And explain how that was even possible.

If you're really bored at work, you should go look at some of the open issues. I had engineering directors at various companies ask me to take this repository down because of all the distractions it's been causing their team. So please, let's add your company to the list of requests to take it down.