HAProxy Kubernetes Ingress Controller 1.10 is now the latest version.

We’re proud to announce the release of version 1.7 of the HAProxy Kubernetes Ingress Controller!

In this release, we added support for custom resource definitions that cover most of the configuration settings. Definitions are available for the global, defaults, and backend sections of the configuration. This promotes a cleaner separation of concerns between the different groups of settings and strengthens the validation of those settings. It also makes it easier to deprecate features in the future and allows multiple versions of the custom resources to coexist within the same cluster.

Also, Docker images for multiple architectures, including Arm, are now available, expanding support for running the ingress controller in Kubernetes on hosts with different processor architectures. You can also download the ingress controller as a binary from the project’s GitHub Releases page, which is needed for external mode. The ingress controller version 1.7 is built with the latest release of HAProxy, version 2.4.

In this blog post, you will learn more about the changes in this version.

Custom Resource Definitions

Since its inception, the HAProxy Kubernetes Ingress Controller has relied heavily on annotations to tune the behavior of the underlying HAProxy load balancer. It supports annotations on the Service and Ingress resource types for per-application settings and similar key-value fields in a ConfigMap for configuring global settings.

For those of you not familiar with them, annotations are key-value settings that you can add to the metadata section of a Kubernetes object. For example, to enable HTTP health checking of the URL path /health, you would add the haproxy.org/check and haproxy.org/check-http annotations to the metadata section of your Service definition:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        run: web
      name: web
      annotations:
        haproxy.org/check: "true"
        haproxy.org/check-http: "/health"
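The same two annotations can also be set on an existing Service from the command line. The following is a minimal sketch using the standard `kubectl annotate` command; the function name `enable_http_check` is hypothetical and only wraps the call for reuse.

```shell
# Set the HAProxy health-check annotations on an existing Service.
# enable_http_check is a hypothetical helper name, not part of the controller.
enable_http_check() {
  service=$1   # name of the Service, e.g. web
  path=$2      # URL path to check, e.g. /health
  kubectl annotate service "$service" --overwrite \
    "haproxy.org/check=true" \
    "haproxy.org/check-http=$path"
}
```

Calling `enable_http_check web /health` would produce the same metadata as the YAML definition, assuming the Service lives in your current namespace.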

When Kubernetes introduced Custom Resource Definitions (CRDs), we realized this alternative had a lot to offer our controller. First, CRDs facilitate a cleaner separation of concerns between the different parts of the ingress controller’s configuration; a single CRD can handle all of the global settings, for example. Second, they keep native Kubernetes resources free of annotations and centralize configuration settings in one place.

The latter point has many advantages on the technical side. If we exclude the exceptional case of dependent CRDs, a custom resource aggregates settings into one location. That means that when a user submits one of the custom resources, it triggers a single event in the controller’s events loop. In contrast, annotations are scattered across the watched resources and they trigger many events in the code. Custom resources clearly improve the atomicity of how we detect and handle events.

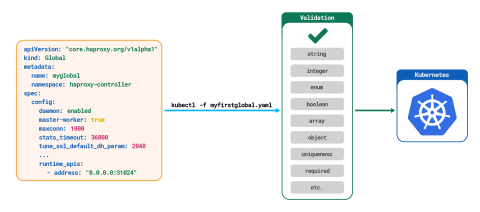

Custom resources also benefit from better validation. They allow us to specify a data type for each setting (e.g. strings, integers, enums, booleans, arrays, and objects) and validate that the submitted value matches. We can also validate other attributes of the CRD’s OpenAPI schema, such as checking whether required fields have been set, and perform those checks early on, when the user submits the custom resource.

Checking that the CRD is coherent as a whole is also made simpler by the all-in-one-place nature of custom resources. We can more easily check fields and their coherency with other, related fields at runtime, in controllers or admission controllers, since the desired state is totally searchable. The illustration below shows the extra validation we can do with a CRD compared to a ConfigMap object.

ConfigMap validation

Global CRD validation

Third, different versions of a CRD can coexist, which is much more difficult to achieve with annotations. Deprecating older settings is also more manageable. Last but not least, custom resources are first-class citizens at the same level as native Kubernetes resources, and they can be added to and removed from the cluster using the same tools.

These observations clearly advocate for adopting CRDs in the HAProxy Kubernetes Ingress Controller. With this release, we offer custom resources for the global, defaults, and backend sections of HAProxy. Please note that you’re not required to switch to using CRDs. Your ingress controller will still function without them.

Now, let’s see some examples of the new HAProxy custom resources, for illustration purposes.

If you’re interested in learning more, check out the HAProxy Kubernetes Ingress Controller documentation.

CRD Examples

When you start version 1.7 of the HAProxy Kubernetes Ingress Controller, the logs will display this message:

Custom API core.haproxy.org not available in cluster.

This indicates two things: you have no HAProxy CRDs present in your cluster, and you’re still using annotations for globals and defaults management. To install the definitions for globals, defaults, and backends into your cluster, run the following kubectl apply commands:

    $ kubectl apply -f https://raw.githubusercontent.com/haproxytech/kubernetes-ingress/8161347cbcb400c09b51c4e161ca5d64a9989d03/crs/definition/defaults.yaml
    $ kubectl apply -f https://raw.githubusercontent.com/haproxytech/kubernetes-ingress/8161347cbcb400c09b51c4e161ca5d64a9989d03/crs/definition/global.yaml
    $ kubectl apply -f https://raw.githubusercontent.com/haproxytech/kubernetes-ingress/ab56e15cbd28eb8958527e4b7a0fb8910be2b0a4/crs/definition/backend.yaml

This has two effects: the previous warning message disappears, and global and defaults annotations are disabled in favor of custom resources. You can check for the presence of the CRDs with the kubectl get crd command.

    $ kubectl get crd
    NAME                        CREATED AT
    defaults.core.haproxy.org   xxxxxxxxxxx
    globals.core.haproxy.org    xxxxxxxxxxx
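If you want to script this check, for example in a deployment pipeline, the sketch below reports which of the expected CRDs are missing. It relies only on the exit status of `kubectl get crd <name>`; the function name `missing_crds` is hypothetical.

```shell
# Print the name of every CRD in the argument list that is not installed.
# missing_crds is a hypothetical helper name.
missing_crds() {
  for crd in "$@"; do
    # kubectl exits non-zero when the named CRD does not exist.
    if ! kubectl get crd "$crd" >/dev/null 2>&1; then
      echo "$crd"
    fi
  done
}
```

For example, `missing_crds globals.core.haproxy.org defaults.core.haproxy.org` prints nothing when both definitions from the listing are installed.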

Globals

Let’s create a simplified global custom resource. Start by creating a YAML file named myglobal.yaml and copy the following contents into it (change the namespace if needed):

    apiVersion: "core.haproxy.org/v1alpha1"
    kind: Global
    metadata:
      name: myglobal
      namespace: default
    spec:
      config:
        maxconn: 1000
        stats_timeout: 36000
        tune_ssl_default_dh_param: 2048
        ssl_default_bind_options: "no-sslv3 no-tls-tickets no-tlsv10"
        ssl_default_bind_ciphers: ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!3DES:!MD5:!PSK
        hard_stop_after: 30000
        server_state_base: /tmp/haproxy-ingress/state
        runtime_apis:
          - address: "0.0.0.0:31024"

Then submit it with the kubectl apply command:

    $ kubectl apply -f myglobal.yaml
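Because the CRD carries an OpenAPI schema, you can ask the API server to validate a manifest without persisting it. The sketch below wraps kubectl's standard `--dry-run=server` flag; the function name `validate_manifest` is hypothetical, and the check assumes the CRDs are already installed in the cluster.

```shell
# Validate a manifest against the installed CRD schema without saving it.
# validate_manifest is a hypothetical helper name.
validate_manifest() {
  kubectl apply --dry-run=server -f "$1"
}
```

Running `validate_manifest myglobal.yaml` should report a schema error for a wrongly typed field, such as a string where maxconn expects an integer, and leave the cluster unchanged.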

Next, to ensure that the ingress controller uses only a single object for global settings, update the controller’s ConfigMap object so that its cr-global field holds the namespace and name of your custom resource:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kubernetes-ingress
      namespace: default
    data:
      cr-global: default/myglobal
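The cr-global value has the form namespace/name. As a rough sketch, the helper below splits such a reference and checks that the Global resource it points to exists. The function name `check_global_ref` is hypothetical; the resource name `globals.core.haproxy.org` is taken from the CRD listing shown earlier.

```shell
# Split a "namespace/name" reference and look up the Global it points to.
# check_global_ref is a hypothetical helper name.
check_global_ref() {
  ref=$1
  namespace=${ref%%/*}   # text before the first slash
  name=${ref#*/}         # text after the first slash
  kubectl get globals.core.haproxy.org "$name" --namespace "$namespace"
}
```

`check_global_ref default/myglobal` fails with a "not found" error from kubectl if the reference in the ConfigMap does not match an existing resource.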

If you were to get a shell prompt into the running ingress controller container, you would see in the resulting HAProxy configuration file something similar to this global section:

    global
      daemon
      localpeer local
      master-worker
      maxconn 1000
      pidfile /tmp/haproxy-ingress/run/haproxy.pid
      stats socket 0.0.0.0:31024
      stats socket /tmp/haproxy-ingress/run/haproxy-runtime-api.sock expose-fd listeners level admin
      stats timeout 36000
      tune.ssl.default-dh-param 2048
      ssl-default-bind-options no-sslv3 no-tls-tickets no-tlsv10
      ssl-default-bind-ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!3DES:!MD5:!PSK
      hard-stop-after 30000
      server-state-file global
      server-state-base /tmp/haproxy-ingress/state
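To look at a single section without scrolling through the whole file, you could filter the configuration text. The sketch below is a small awk filter that prints one named section from HAProxy configuration read on standard input. It assumes sections start at column zero and their directives are indented, as in the output above; the function name `extract_section` is hypothetical.

```shell
# Print the named top-level section (e.g. global, defaults) from an
# HAProxy configuration supplied on standard input.
# extract_section is a hypothetical helper name.
extract_section() {
  awk -v section="$1" '
    # A line starting in column zero (and not a comment) opens a new section.
    /^[^ \t#]/ { printing = ($1 == section) }
    printing
  '
}
```

For instance, you might pipe the output of `kubectl exec <pod> -- cat /etc/haproxy/haproxy.cfg` into `extract_section global`. The pod name is a placeholder, and the file path is an assumption about where the controller writes its configuration.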

The table below lists the fields that you can configure in a global custom resource.

| | | |
|---|---|---|
| chroot | group | hard_stop_after |
| nbproc | ssl_default_bind_ciphersuites | log_send_hostname |
| maxconn | server_state_base | tune_ssl_default_dh_param |
| nbthread | ssl_default_server_options | ssl_default_server_ciphers |
| cpu_maps | runtime_apis | stats_timeout |
| user | ssl_default_bind_ciphers | ssl_default_bind_options |
| external_check | ssl_default_server_ciphersuites | ssl_mode_async |
| lua_loads | | |

The mapping to the HAProxy configuration is straightforward, as you can see in the example. The table lists 22 fields, many of which introduce capabilities that were not previously available as annotations. Note that global-config-snippet, which lets you define a raw chunk of configuration to be inserted into the HAProxy configuration file, is not supported in the global CRD since all settings are now individually configurable. If you have a global-config-snippet, translate it into the equivalent fields in your global custom resource.

Defaults

With more than 50 fields, the defaults CRD permits a complete configuration of the HAProxy defaults section. For this example, create the file mydefaults.yaml with the following contents (change the namespace if needed):

    apiVersion: "core.haproxy.org/v1alpha1"
    kind: Defaults
    metadata:
      name: mydefaults
      namespace: default
    spec:
      config:
        log_format: "'%ci:%cp [%tr] %ft %b/%s %TR/%Tw/%Tc/%Tr/%Ta %ST %B %CC %CS %tsc %ac/%fc/%bc/%sc/%rc %sq/%bq %hr %hs \"%HM %[var(txn.base)] %HV\"'"
        redispatch:
          enabled: enabled
          interval: 0
        dontlognull: enabled
        http_connection_mode: http-keep-alive
        http_request_timeout: 5000
        connect_timeout: 5000
        client_timeout: 50000
        queue_timeout: 5000
        server_timeout: 50000
        tunnel_timeout: 3600000
        http_keep_alive_timeout: 60000

Then apply the configuration to the cluster:

    $ kubectl apply -f mydefaults.yaml

For the same reason as with global, you specify the defaults custom resource instance you want to apply with an additional field in your ConfigMap:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kubernetes-ingress
      namespace: default
    data:
      cr-global: default/myglobal
      cr-defaults: default/mydefaults

This example produces the following defaults section in the HAProxy configuration:

    defaults
      log global
      log-format '%ci:%cp [%tr] %ft %b/%s %TR/%Tw/%Tc/%Tr/%Ta %ST %B %CC %CS %tsc %ac/%fc/%bc/%sc/%rc %sq/%bq %hr %hs "%HM %[var(txn.base)] %HV"'
      option redispatch 0
      option dontlognull
      option http-keep-alive
      timeout http-request 5000
      timeout connect 5000
      timeout client 50000
      timeout queue 5000
      timeout server 50000
      timeout tunnel 3600000
      timeout http-keep-alive 60000
      load-server-state-from-file global

Backends

A backend CRD completes this first batch of newly introduced CRDs. There are simply too many possibilities with this CRD to provide a comprehensive description. We strongly encourage you to browse the definition to get an idea of all these possibilities.

How does it work? You create deployments, services, and ingresses exactly as before, but now you can add the cr-backend annotation to services, ingresses, or to the global ConfigMap in order to use your backend custom resource. The ingress controller uses the first backend CRD it finds in the following order: ConfigMap, Ingress, and then Service.
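The lookup order described above amounts to "first non-empty reference wins". As an illustrative sketch only, not the controller's actual code, the hypothetical helper below takes the cr-backend values found on the ConfigMap, the Ingress, and the Service, in that order, and prints the one that would be used.

```shell
# Print the first non-empty reference among the arguments.
# Pass the cr-backend values in precedence order: ConfigMap, Ingress, Service.
# select_backend_cr is a hypothetical helper name.
select_backend_cr() {
  for ref in "$@"; do
    if [ -n "$ref" ]; then
      echo "$ref"
      return 0
    fi
  done
  return 1   # no backend custom resource referenced anywhere
}
```

With an empty ConfigMap value, `select_backend_cr "" "default/ingress-backend" "default/service-backend"` selects the Ingress reference; the resource names here are made up for the example.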

The backend custom resource lets you customize the backend configuration. In this simple example, we’ll change the balance algorithm of the default backend. Create a file named mydefaultbackend.yaml with the following contents (change the namespace if required):

    apiVersion: "core.haproxy.org/v1alpha1"
    kind: Backend
    metadata:
      name: kubernetes-ingress-default-backend
      namespace: default
    spec:
      config:
        balance:
          algorithm: "leastconn"

As usual, we apply it to the cluster with:

    $ kubectl apply -f mydefaultbackend.yaml

In our cluster, the default backend service is default/kubernetes-ingress-default-backend. Add the following cr-backend annotation to your ConfigMap to attach this CRD to the default backend service:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kubernetes-ingress
      namespace: default
    data:
      cr-global: default/myglobal
      cr-defaults: default/mydefaults
      cr-backend: default/kubernetes-ingress-default-backend

The backend in the HAProxy configuration then has a modified load balancing algorithm:

    backend default-kubernetes-ingress-default-backend-http
      mode http
      balance leastconn
      option forwardfor
      server SRV_1 172.17.0.3:8080 check weight 128
      server SRV_2 172.17.0.5:8080 check weight 128
      server SRV_3 127.0.0.1:8080 disabled check weight 128

Distribution of Connections to Services/Pods

Let’s cover some of the other updates in the release.

The pod-maxconn annotation, which limits the maximum number of connections that the ingress controller will send to a pod, now factors in the connections coming through all running instances of the ingress controller, making the setting accurate across your cluster. This lets you define precisely how many connections should reach your application's services and pods. The number of running ingress controllers no longer affects that total, which is especially important when services and their pods are highly sensitive to the number of connections they receive.

The diagram below illustrates how the maxconn value is divided among multiple instances of the ingress controller.

maxconn with one ingress controller

maxconn with two ingress controllers
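The arithmetic behind this division can be sketched in a few lines. The helper below assumes an even split that rounds up, so that each controller gets at least one connection; the rounding rule and the function name `per_controller_maxconn` are assumptions for illustration, not a description of the controller's exact implementation.

```shell
# Share a pod-maxconn value evenly among the running ingress controllers.
# Assumption: the result is rounded up to the next whole connection.
# per_controller_maxconn is a hypothetical helper name.
per_controller_maxconn() {
  pod_maxconn=$1
  controllers=$2
  echo $(( (pod_maxconn + controllers - 1) / controllers ))
}
```

With pod-maxconn set to 100, `per_controller_maxconn 100 1` gives 100 and `per_controller_maxconn 100 2` gives 50, so the pod still sees at most 100 connections in total.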

New ALPN Option

A new option, tls-alpn, is available for the ConfigMap resource. It lets you set the ALPN advertisement used when TLS is enabled. Its default value remains h2,http/1.1, which means the controller prefers HTTP/2 but falls back to HTTP/1.1. For example, to disable HTTP/2, set this option to http/1.1.
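As a concrete sketch, the snippet below writes a ConfigMap manifest that advertises only HTTP/1.1. The ConfigMap name and namespace match the examples earlier in this post; the file name `tls-alpn-configmap.yaml` is arbitrary.

```shell
# Write a ConfigMap manifest that disables HTTP/2 by advertising only
# HTTP/1.1 through ALPN. The output file name is arbitrary.
cat > tls-alpn-configmap.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: kubernetes-ingress
  namespace: default
data:
  tls-alpn: "http/1.1"
EOF
```

You would then submit it with `kubectl apply -f tls-alpn-configmap.yaml`. In practice, add the tls-alpn key to your existing ConfigMap manifest instead, so that applying it does not drop other keys such as cr-global.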

Implementation of Specific Path Types in Ingress Rules

The ingress controller supports all currently available versions of the Ingress API:

extensions/v1beta1 (Required for Kubernetes < 1.14)

networking.k8s.io/v1beta1 (Kubernetes < 1.19)

networking.k8s.io/v1

While they share much of the same structure, some differences exist around the PathType attribute of an Ingress definition. To handle all versions correctly, the path type is treated according to two rules: an unknown path type is rejected, and an empty path type with the v1beta1 API version is set to ImplementationSpecific. This preserves behavior for older resources while still supporting the newly introduced attributes.
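The two rules can be expressed as a small decision function. This is an illustrative sketch rather than the controller's code: the function name `resolve_path_type` is hypothetical, the accepted values are the three path types defined by the Kubernetes Ingress API, and rejecting an empty path type under networking.k8s.io/v1 is an assumption, since the text does not cover that case.

```shell
# Resolve the effective path type for an Ingress path.
# $1 = Ingress API version, $2 = path type as written in the resource.
# resolve_path_type is a hypothetical helper name.
resolve_path_type() {
  api_version=$1
  path_type=$2
  case "$path_type" in
    Exact|Prefix|ImplementationSpecific)
      echo "$path_type" ;;
    "")
      case "$api_version" in
        # Rule 2: empty path type on a v1beta1 API defaults.
        */v1beta1) echo "ImplementationSpecific" ;;
        # Assumption: v1 requires an explicit path type.
        *) echo "empty path type not allowed for $api_version" >&2
           return 1 ;;
      esac ;;
    *)
      # Rule 1: unknown path types are rejected.
      echo "unknown path type: $path_type" >&2
      return 1 ;;
  esac
}
```

For example, `resolve_path_type networking.k8s.io/v1beta1 ""` prints ImplementationSpecific, while an unrecognized value such as Regex makes the function return a non-zero status.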

Multiarch Support

People run Kubernetes on many different platforms, and several of them are becoming quite popular. Recall that the processor architecture that a Docker image targets must match the architecture of the host on which it runs. We have expanded availability to the Arm platform, and the ingress controller Docker image is now available on these platforms:

linux/386

linux/amd64

linux/arm/v6

linux/arm/v7

linux/arm64

Images on all platforms are pulled the same way:

    $ docker pull haproxytech/kubernetes-ingress:<tag>

Here, a tag is the desired version of the controller that you want to install.
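Docker normally selects the image variant that matches the host. If you need a specific variant, for example to pre-load an Arm image from an amd64 workstation, the `--platform` flag of `docker pull` lets you request it explicitly. The sketch below checks the requested platform against the list above first; the function name `pull_for_platform` is hypothetical.

```shell
# Pull the ingress controller image for an explicitly chosen platform.
# $1 = platform (one of the published ones), $2 = image tag.
# pull_for_platform is a hypothetical helper name.
pull_for_platform() {
  platform=$1
  tag=$2
  case "$platform" in
    linux/386|linux/amd64|linux/arm/v6|linux/arm/v7|linux/arm64)
      docker pull --platform "$platform" "haproxytech/kubernetes-ingress:$tag" ;;
    *)
      echo "unsupported platform: $platform" >&2
      return 1 ;;
  esac
}
```

For example, `pull_for_platform linux/arm64 <tag>` requests the 64-bit Arm image for the tag you choose.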

s6 Init System

We now use s6 overlay as a process supervisor inside the Docker container. The Docker image runs two processes simultaneously: the HAProxy load balancer and the ingress controller service; s6 allows it to do this in a more robust way. The s6-overlay provides proper PID 1 functionality so our services can concentrate on what they need to do.

Nightly Builds

For users eager to try the latest features that won’t be available until the next release, nightly builds create Docker images that can be pulled with the docker pull command:

    $ docker pull haproxytech/kubernetes-ingress:nightly

Or, edit the ingress controller’s deployment YAML file to use the nightly tag. A YAML file exists for deploying a Deployment or DaemonSet to your cluster.
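If the controller is already running as a Deployment, an alternative to editing the YAML file is to switch the image in place with the standard `kubectl set image` command. In the sketch below, the function name `use_nightly_image` is hypothetical, and the namespace, Deployment name, and container name are parameters because they depend on how you installed the controller.

```shell
# Point an existing Deployment's container at the nightly image.
# $1 = namespace, $2 = Deployment name, $3 = container name.
# use_nightly_image is a hypothetical helper name.
use_nightly_image() {
  namespace=$1
  deployment=$2
  container=$3
  kubectl set image "deployment/$deployment" \
    "$container=haproxytech/kubernetes-ingress:nightly" \
    --namespace "$namespace"
}
```

Since the nightly tag is rebuilt regularly, you may also want the container's imagePullPolicy set to Always so that restarted pods pick up the newest build.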

External Mode

Beyond deploying the ingress controller as a Docker container, binaries for 12 different platforms are available for download with every release. These are needed in order to use the controller in external (out-of-cluster) mode.

These binaries are built automatically with goreleaser and are available on the project’s GitHub Releases page.

Contributions

We’d like to thank the code contributors who helped make this version possible!

| Contributor | Areas |
|---|---|
| Moemen MHEDHBI | CLEANUP BUILD DOC BUG FEATURE TEST REORG OPTIM |
| Ivan Matmati | FEATURE DOC BUG |
| Zlatko Bratkovic | CLEANUP FEATURE DOC BUG TEST REORG BUILD |
| Dinko Korunic | FEATURE BUILD |
| Robert Maticevic | BUILD |
| Nick Ramirez | DOC |
| Cristian Aldea | DOC FEATURE |
| Anton Troyanov | FEATURE |
| Fabiano Parente | BUG |
| Pasi Tuominen | BUG FEATURE |
| Kevin Ard | BUG |
| Toshokan | BUG |

Conclusion

The biggest update is the introduction of CRDs. Version 1.7 uses the power of custom resource definitions to bring you more access to the features present in the underlying HAProxy engine. CRDs support better validation and control, even before committing changes to your Kubernetes cluster. These features strengthen the flexibility and security of your ingress solution.

Interested in learning more about the HAProxy Kubernetes Ingress Controller? Subscribe to our blog!
