The new HAProxy Kubernetes Ingress Controller provides a high-performance ingress for your Kubernetes-hosted applications.

Containers allow cross-functional teams to share a consistent view of an application as it flows through the engineering, quality assurance, deployment, and support phases. The Docker CLI gives you a common interface through which you can package, version, and run your services. What’s more, with orchestration platforms like Kubernetes, the same tools can be leveraged to manage services across isolated Dev, Staging, and Production environments.

This technology is especially convenient for teams working with a microservices architecture, which produces many small but specialized services.

However, the challenge is: How do you expose your containerized services outside the container network?

In this blog post, you’ll learn about the new HAProxy Kubernetes Ingress Controller, which is built upon HAProxy, the world’s fastest and most widely used load balancer. As you’ll see, using an ingress controller solves several tricky problems and provides an efficient, cost-effective way to route requests to your containers. Having HAProxy as the engine gives you access to many advanced features you know and love.


Kubernetes Basics

First, let’s review the common approaches to routing external traffic to a pod in Kubernetes.

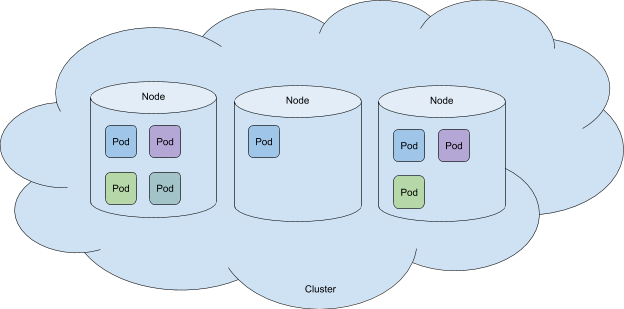

Since many thorough explanations of Kubernetes are available online, we will cover only the essentials. Kubernetes consists of physical or virtual machines, called nodes, that together form a cluster. Within the cluster, Kubernetes deploys pods. Each pod wraps one or more containers and represents a service that runs in Kubernetes. Pods can be created and destroyed as needed.

A Kubernetes cluster

To maintain the desired state of the cluster, such as which containers and how many should be deployed at a given time, we have additional objects in Kubernetes. These include ReplicaSets, StatefulSets, Deployments, DaemonSets, and more. For our current discussion, let’s skip ahead and get to the meat of the topic: accessing pods via Services and Controllers.

Services

A service is an abstraction that allows you to connect to pods in a container network without needing to know a pod’s location (that is, which node it is running on) or to be concerned about a pod’s lifecycle. The service also allows you to direct external traffic to pods. Essentially, it’s a primitive sort of reverse proxy. However, the mechanics that determine how traffic is routed depend on the service’s type, of which there are four:

ClusterIP

ExternalName

NodePort

LoadBalancer

When using Kubernetes services, each type has its pros and cons. We won’t discuss ClusterIP because it doesn’t allow for external traffic to reach the service—only traffic that originates within the cluster. ExternalName is used to route to services running outside of Kubernetes, so we won’t cover it either. That leaves the NodePort and LoadBalancer types.

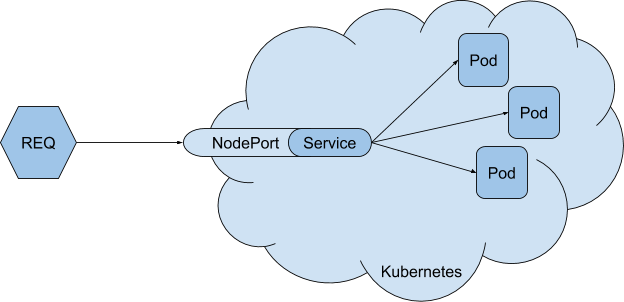

#1 NodePort

When you set a service’s type to NodePort, that service begins listening on a static port on every node in the cluster. So, you’ll be able to reach the service via any node’s IP and the assigned port. Internally, Kubernetes does this by using L4 routing rules and Linux iptables.

Routing via NodePort

While this is the simplest solution, it can be inefficient and doesn’t provide the benefits of L7 routing. It also requires downstream clients to be aware of your nodes’ IP addresses since they will need to connect to those addresses directly. In other words, they won’t be able to connect to a single, proxied IP address.
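As a minimal sketch of what such a Service could look like, the manifest below exposes a set of pods on a fixed node port. The Service name, the `run: app` label, and all port numbers are assumptions chosen for illustration. A `nodePort` value must fall within the cluster's node port range, which defaults to 30000-32767.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: app-nodeport      # hypothetical name
spec:
  type: NodePort
  selector:
    run: app              # assumed pod label
  ports:
  - name: http
    protocol: TCP
    port: 80              # port on the Service's cluster IP
    targetPort: 8080      # port the container listens on
    nodePort: 30080       # static port opened on every node; omit to let Kubernetes pick one
```

With a Service like this in place, a client would reach the pods at port 30080 on any node's IP address, which is exactly the coupling to node addresses described above.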

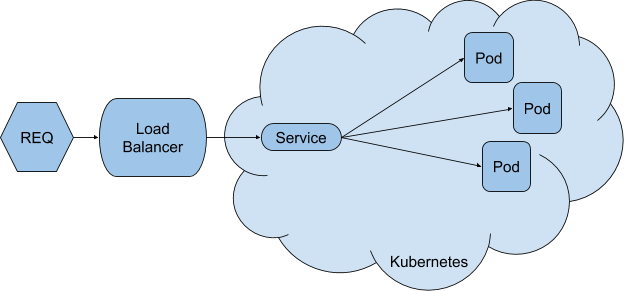

#2 LoadBalancer

When you set a service type to LoadBalancer, it exposes the service externally. However, to use it, you need to have an external load balancer. The external load balancer needs to be connected to the internal Kubernetes network on one end and opened to public-facing traffic on the other in order to route incoming requests. Due to the dynamic nature of pod lifecycles, keeping an external load balancer configuration valid is a complex task, but this does allow L7 routing.

Routing via LoadBalancer

Oftentimes, when using Kubernetes with a platform-as-a-service, such as AWS’s EKS, Google’s GKE, or Azure’s AKS, the load balancer is provisioned for you automatically using the cloud provider’s own load balancer solution. If you create multiple Service objects of this type, which is common, you’ll create a hosted load balancer for each one. This can be expensive in terms of resources. You also lose the ability to choose your own preferred load balancer technology.

There needed to be a better way. The limited and potentially costly methods for exposing Kubernetes services to external traffic led to the invention of Ingress objects and Ingress Controllers.

Controllers

The official definition of a controller, not specific to ingress controllers, is:

a control loop that watches the cluster's shared state through the apiserver and makes changes attempting to move the current state towards the desired state.

For example, a Deployment is a type of controller used to manage a set of pods. It is responsible for replicating and scaling applications. It watches the state of the cluster in a continuous loop. If you manually kill a pod, the Deployment object will take notice and immediately spin up a new one to keep the configured number of pods active and stable.

Other types of controllers manage functions related to persistent storage, service accounts, resource quotas, and cronjobs. So, in general, controllers are the watchers, ensuring that the system remains consistent. An Ingress Controller fits right in. It watches for new services within the cluster and is able to create routing rules for them dynamically.

Ingress

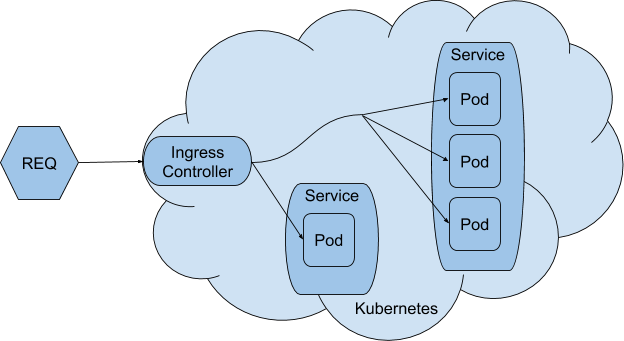

An Ingress object is an independent resource, apart from Service objects, that configures external access to a service’s pods. This means you can define the Ingress later, after the Service has been deployed, to hook it up to external traffic. That is convenient because you can isolate service definitions from the logic of how clients connect to them. This approach gives you the most flexibility.

L7 routing is one of the core features of Ingress, allowing incoming requests to be routed to the exact pods that can serve them based on HTTP characteristics, such as the requested URL path. Other features include terminating TLS, using multiple domains, and, most importantly, load balancing traffic.
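To make these features concrete, here is a hedged sketch of an Ingress that routes by host and URL path and terminates TLS for one domain. Every name in it (the hosts, the `api`, `storefront`, and `blog` Services, and the `shop-example-tls` Secret) is hypothetical. It uses the same `networking.k8s.io/v1beta1` schema as the example later in this post; newer clusters serve Ingress as `networking.k8s.io/v1`, which uses a different backend syntax and requires a `pathType` on each path.

```yaml
apiVersion: networking.k8s.io/v1beta1   # same API version as the example later in this post
kind: Ingress
metadata:
  name: example-routing                 # hypothetical name
spec:
  tls:
  - hosts:
    - shop.example.com
    secretName: shop-example-tls        # hypothetical Secret holding the certificate and key
  rules:
  - host: shop.example.com
    http:
      paths:
      - path: /api                      # requests under /api go to the api Service
        backend:
          serviceName: api
          servicePort: 80
      - path: /                         # everything else on this host goes to storefront
        backend:
          serviceName: storefront
          servicePort: 80
  - host: blog.example.com              # a second domain handled by the same Ingress
    http:
      paths:
      - path: /
        backend:
          serviceName: blog
          servicePort: 80
```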

Routing via Ingress

In order for Ingress objects to be usable, you must have an Ingress Controller deployed within your cluster that implements the Ingress rules as they are detected. Like other types of controllers, an Ingress Controller continuously watches for changes. Since pods in Kubernetes have arbitrary IPs and ports, it is the responsibility of an Ingress Controller to hide all internal networking from you, the operator. You only need to define which route maps to which service, and the system will handle making the changes happen.

It’s important to note that Ingress Controllers still need a way to receive external traffic. This can be done by exposing the Ingress Controller as a Kubernetes service with either type NodePort or LoadBalancer. However, this time, when you add an external load balancer, it will only be for one service and the external load balancer’s configuration can be more static.
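For illustration, a Service of type LoadBalancer in front of the controller might look like the sketch below. The namespace and the `run: haproxy-ingress` label match the installation manifest discussed later in this post; the Service name is hypothetical, and the sketch assumes your environment is able to provision an external load balancer.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: haproxy-ingress-lb        # hypothetical name
  namespace: haproxy-controller
spec:
  type: LoadBalancer              # assumes the platform can provision an external load balancer
  selector:
    run: haproxy-ingress          # label carried by the Ingress Controller pods
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
```

Because only the controller is exposed this way, a single external load balancer serves every application routed through Ingress rules.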

HAProxy Ingress Controller

Best known for reliability and performance, among other features, HAProxy meets all the requirements of an Ingress Controller. In Kubernetes, high volumes of traffic are expected, and the environment is highly dynamic because pods are constantly being created, deleted, and relocated. HAProxy, with its Runtime API, Data Plane API, and hitless reloads, excels in such an environment.

With HAProxy as the engine, requests are load balanced just as they would be in a traditional deployment. Configuration is a matter of the controller fetching all the required data from the Kubernetes API and filling it into HAProxy. The most demanding part is syncing the status of pods since the environment is highly dynamic, and pods can be created or destroyed at any time. The controller feeds those changes directly to HAProxy via the HAProxy Native Golang Client library, which wraps the Runtime API and Data Plane API functionality.

You can fine-tune the Ingress Controller with a ConfigMap resource and/or annotations on the Ingress object. This allows you to decouple proxy configuration from services and keep everything more portable.

Installing the HAProxy Ingress Controller

Installing the controller is easy. You can use Helm to deploy it to your cluster. Learn more in our blog post, Use Helm to Install the HAProxy Kubernetes Ingress Controller. The other way to install it is to execute kubectl apply with the path to the installation YAML file, as shown:

    kubectl apply -f https://raw.githubusercontent.com/haproxytech/kubernetes-ingress/master/deploy/haproxy-ingress.yaml

To demonstrate how everything works, we will dissect this YAML file and step through the objects that are included. The Helm chart is also based on this file.

Dissecting the YAML File

In this section, we’ll deconstruct the haproxy-ingress.yaml file and explain what it is doing. It creates the following resources in your Kubernetes cluster:

A Namespace in which to place the resources

A ServiceAccount with which to run the Ingress Controller

A ClusterRole to define permissions

A ClusterRoleBinding to associate the ClusterRole with the service account

A default service to handle all unrouted traffic and return 404

A Deployment to deploy the Ingress Controller pod

A Service to allow traffic to reach the Ingress Controller

The first thing that the YAML file does is create a namespace in which to place the resources. This avoids cluttering up the default namespace and keeps objects that are related to the ingress controller neatly in one place. It also creates a service account with which to run the controller.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: haproxy-controller
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: haproxy-ingress-service-account
      namespace: haproxy-controller

Since most Kubernetes deployments use role-based access control (RBAC), granting the necessary permissions to the service account is done by using a ClusterRole and ClusterRoleBinding resource. This allows the controller to watch the cluster for changes so that it can update the underlying HAProxy configuration.

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: haproxy-ingress-cluster-role
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - services
      - namespaces
      - events
      - serviceaccounts
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses
      - ingresses/status
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - secrets
      verbs:
      - get
      - list
      - watch
      - create
      - patch
      - update
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: haproxy-ingress-cluster-role-binding
      namespace: haproxy-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: haproxy-ingress-cluster-role
    subjects:
    - kind: ServiceAccount
      name: haproxy-ingress-service-account
      namespace: haproxy-controller

When there is no Ingress that matches an incoming request, we want to return a 404 page. A default service is deployed to handle the traffic that isn’t picked up by any routing rule. Later, when the controller is defined, an argument named --default-backend-service is specified that points to this service.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        run: ingress-default-backend
      name: ingress-default-backend
      namespace: haproxy-controller
    spec:
      replicas: 1
      selector:
        matchLabels:
          run: ingress-default-backend
      template:
        metadata:
          labels:
            run: ingress-default-backend
        spec:
          containers:
          - name: ingress-default-backend
            image: gcr.io/google_containers/defaultbackend:1.0
            ports:
            - containerPort: 8080
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        run: ingress-default-backend
      name: ingress-default-backend
      namespace: haproxy-controller
    spec:
      selector:
        run: ingress-default-backend
      ports:
      - name: port-1
        port: 8080
        protocol: TCP
        targetPort: 8080

Next, the Ingress Controller container is deployed. By default, one replica is created, but you can change this to run more instances of the controller within your cluster. Traffic is accepted on container ports 80 and 443, with the HAProxy Stats page and Prometheus metrics exposed on port 1024. There’s also an empty ConfigMap named haproxy-configmap, which is specified with the --configmap argument passed to the controller.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: haproxy-configmap
      namespace: default
    data:
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        run: haproxy-ingress
      name: haproxy-ingress
      namespace: haproxy-controller
    spec:
      replicas: 1
      selector:
        matchLabels:
          run: haproxy-ingress
      template:
        metadata:
          labels:
            run: haproxy-ingress
        spec:
          serviceAccountName: haproxy-ingress-service-account
          containers:
          - name: haproxy-ingress
            image: haproxytech/kubernetes-ingress
            args:
            - --default-backend-service=haproxy-controller/ingress-default-backend
            - --default-ssl-certificate=default/tls-secret
            - --configmap=default/haproxy-configmap
            ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
            - name: stat
              containerPort: 1024
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace

You can configure the controller by adding your own ConfigMap resource to overwrite the empty one; its name must match the value passed to --configmap. The other arguments set the location of the default TLS secret and the name of the default backend service.
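The --default-ssl-certificate=default/tls-secret argument refers to a Secret named tls-secret in the default namespace. As a sketch of what that object could look like, the manifest below uses the standard kubernetes.io/tls Secret type. The two data values are placeholders, not real data, and must be replaced with your own base64-encoded PEM certificate and private key before applying.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: tls-secret
  namespace: default
type: kubernetes.io/tls
data:
  tls.crt: REPLACE_WITH_BASE64_ENCODED_CERTIFICATE   # placeholder, not real data
  tls.key: REPLACE_WITH_BASE64_ENCODED_PRIVATE_KEY   # placeholder, not real data
```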

In order to allow client traffic to reach the Ingress Controller, a Service with type NodePort is added:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        run: haproxy-ingress
      name: haproxy-ingress
      namespace: haproxy-controller
    spec:
      selector:
        run: haproxy-ingress
      type: NodePort
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 80
      - name: https
        port: 443
        protocol: TCP
        targetPort: 443
      - name: stat
        port: 1024
        protocol: TCP
        targetPort: 1024

Each container port is mapped to a NodePort port via the Service object. After installing the Ingress Controller using the provided YAML file, you can use kubectl get svc --namespace=haproxy-controller to see the ports that were mapped:

    $ kubectl get svc --namespace=haproxy-controller
    NAMESPACE            NAME              TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)                                     AGE
    haproxy-controller   haproxy-ingress   NodePort   10.96.15.205   <none>        80:30279/TCP,443:30775/TCP,1024:31912/TCP   84s

In this instance, the following ports were mapped:

Container port 80 to NodePort 30279

Container port 443 to NodePort 30775

Container port 1024 to NodePort 31912

Adding an Ingress

Now that you have the Ingress Controller installed, it’s time to spin up a sample application and add an Ingress resource that will route traffic to your pod. You will create the following:

A Deployment for your pods

A Service for your pods

An Ingress that defines routing to your pods

A ConfigMap to configure the controller

Before setting up an application, you can see that the default service returns 404 Not Found responses for all routes. If you are using Minikube, you can get the IP of the cluster with the minikube ip command. Use curl to send a request with a Host header of foo.bar and get back a 404 response:

    $ curl -I -H 'Host: foo.bar' 'http://192.168.99.100:30279'
    HTTP/1.1 404 Not Found
    date: Thu, 27 Jun 2019 21:45:20 GMT
    content-length: 21
    content-type: text/plain; charset=utf-8

Let’s launch a sample application. Prepare the Deployment, Service and Ingress objects by creating a file named 1.ingress.yaml and adding the following markup:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        run: app
      name: app
    spec:
      replicas: 2
      selector:
        matchLabels:
          run: app
      template:
        metadata:
          labels:
            run: app
        spec:
          containers:
          - name: app
            image: jmalloc/echo-server
            ports:
            - containerPort: 8080
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        run: app
      name: app
      annotations:
        haproxy.org/check: "enabled"
        haproxy.org/forwarded-for: "enabled"
        haproxy.org/load-balance: "roundrobin"
    spec:
      selector:
        run: app
      ports:
      - name: port-1
        port: 80
        protocol: TCP
        targetPort: 8080
    ---
    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: web-ingress
      namespace: default
    spec:
      rules:
      - host: foo.bar
        http:
          paths:
          - path: /
            backend:
              serviceName: app
              servicePort: 80

Apply the configuration with the kubectl apply command:

    $ kubectl apply -f 1.ingress.yaml

This will allow all traffic for http://foo.bar/ to go to our web service. Next, define a ConfigMap to tune other settings. Add a file named 2.configmap.yaml with the following markup:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: haproxy-configmap
      namespace: default
    data:
      servers-increment: "42"
      ssl-redirect: "OFF"

Within this ConfigMap, which we’ve left mostly empty except for the servers-increment and ssl-redirect settings, you can define many options, including timeouts, whitelisting, and certificate handling. Nearly all settings can be defined here, but some can also be configured by using annotations on the Ingress resource.

Apply this configuration with the kubectl apply command:

    $ kubectl apply -f 2.configmap.yaml

You can set fine-grained options to allow different setups globally, for certain ingress objects, or even for only one service. That is helpful, for instance, if you want to limit access for some services, such as for a new application that is still a work-in-progress and needs to be whitelisted so that it can be accessed only by developers’ IP addresses.
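The sketch below illustrates that layering: one setting applied globally through the ConfigMap and one applied only to a single Ingress through an annotation. The `timeout-client` key and the `haproxy.org/whitelist` annotation are assumed names that follow the naming pattern of the settings used in this post, and the address range is a reserved documentation range; check the controller's documentation for the exact keys your version supports. It is an illustration only and is not needed for the rest of the walkthrough, since applying the annotation would reject requests from addresses outside the listed range.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: haproxy-configmap
  namespace: default
data:
  servers-increment: "42"
  ssl-redirect: "OFF"
  timeout-client: "30s"                       # assumed key name; applies to all traffic
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  namespace: default
  annotations:
    haproxy.org/whitelist: "203.0.113.0/24"   # assumed annotation name; applies to this Ingress only
spec:
  rules:
  - host: foo.bar
    http:
      paths:
      - path: /
        backend:
          serviceName: app
          servicePort: 80
```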

Use curl again, and it will return a successful response:

    $ curl -I -H 'Host: foo.bar' 'http://192.168.99.100:30279'
    HTTP/1.1 200 OK
    content-type: text/plain
    date: Thu, 27 Jun 2019 21:46:30 GMT
    content-length: 136

Conclusion

In this blog post, you learned how to route traffic to pods using the HAProxy Ingress Controller in Kubernetes. With all of the powerful benefits HAProxy offers, you don’t have to settle for the cost and limited features tied to a cloud provider’s load balancer. Try it out the next time you need to expose a service to public-facing traffic.

If you enjoyed this article and want to keep up to date on similar topics, subscribe to this blog.

HAProxy Enterprise includes a robust and cutting-edge codebase, an enterprise suite of add-ons, expert support, and professional services. Contact us to learn more and sign up for an HAProxy Enterprise free trial.
